AI Goes to College No. 6: Does AI hurt critical thinking, integrating Google Docs and Gemini, and some great new tools

Helping higher education professionals navigate the world of generative AI

In this issue ...

A terrible new anti-AI detection "tool"

Does AI hurt critical thinking and academic performance?

How not to talk about AI in education

Claude 3 takes the lead

Using Google Docs with Gemini

Claude 3 Haiku - Best combination of speed and performance?

The Curricula - A glimpse of what AI can be

Rant of the week

A laughable new anti-AI detection tool

Recently, I learned about an anti-AI detection tool through a newsletter. (I subscribe to LOTS of AI-focused newsletters so you don't have to!) I'm not going to put the name of the "tool" in the newsletter, but I'm happy to tell you if you want to know. Just email me at craig@aigoestocollege.com. For now, I'll just call it "Cheat."

I was curious about how well this paraphraser would work. My fellow faculty, you do not need to be concerned, at least not yet. The results were so bad they were funny.

Since I'd been working on an episode of my other podcast, Live Well and Flourish (https://www.livewellandflourish.com/), I asked ChatGPT to write an essay based on that episode. (The episode is about finding hope in troubled times.) (Is it just me, or are there lots of parentheticals in this article?)

There's a word limit on the free version of Cheat, so I just pasted in part of what ChatGPT wrote. Here's what I entered:

In the midst of an era marked by wars, economic upheaval, and a contentious political climate, the quest for hope becomes not just a personal endeavor but a collective necessity. The proposed podcast episode, "Hope in Strange Times," is a testament to this quest, offering a beacon of light through the synthesis of classic philosophy and modern psychology. This essay delves into the core themes of the episode: finding the good, taking control, and finding a purpose, weaving together a narrative that not only acknowledges the darkness of our times but also illuminates pathways toward hope.

Finding the Good

In challenging times, the ability to find the good—a concept deeply rooted in both Stoic philosophy and positive psychology—becomes a crucial resilience strategy.

Here's what Cheat returned:

During times of war, economic depression, and turbulent political unrest when the future seems bleak, the need to look for hope transcends an individual’s interest and becomes a public obligation. “Hope in Strange Times,” the proposed podcast episode, is an attestation to this state of being that allows a ray of light to seep into distressed souls by combining old philosophy with contemporary psychology. This essay explores the central tenets of the episode: Finding the good, Taking control, and Finding a purpose, connecting stories and thread of hope in the otherwise dark hour of humanity.

"Attestation", really? "Ray of light to seep into distressed souls ..." The revised text is terrible and clearly wasn't written by any human student I've ever known. The revision could serve a purpose though; it's a great example of purple prose.

Since I wanted to be fair, I asked Gemini to write a 250-word essay on finding hope in troubled times. I put the first 100 words or so into Cheat. Here's the part I gave Cheat:

Throughout history, humanity has endured wars, economic depressions, pandemics, and countless other trials. Finding hope in these dark hours can feel like searching for a needle in the proverbial haystack. Yet, hope is an inextinguishable flame within the human spirit, and even in the most troubled times, there are ways to rekindle its light.

One way to uncover hope lies in turning inwards and seeking connection with others. Sharing our burdens, finding solace in a listening ear, and extending a hand of support can be profound sources of strength. Remember, you are not alone. In our shared experiences, we find the threads of resilience that bind us together.

Focus on the small victories and acts of kindness that illuminate the darkest of times.

Cheat didn't do any better this time:

Fleetingly, humanity has withstood wars, economic recessions, viruses, and more. Everyone on earth has felt distant hope in these dark hours, alienated like a small needle in a haystack. Indeed, hope is a string of the indomitable human spirit, and in the most troubled times, you can fan it. Perhaps the easiest way is to look inside yourself for a connection with another person. Sharing the accumulation of grief and suffering, taking your sorrows, giving support or a shoulder – these are potent sources of strength. Just remember – you are not alone, and as much as we share experiences, we develop threads of human resilience between us. Finally, consider the small victories or acts of kindness that brighten the glare of the darkest satire.

When was the last time you heard a student say "fleetingly"? My favorite bit is "... alienated like a small needle in a haystack." That's a pretty big shift from the original "Finding hope in these dark hours can feel like searching for a needle in the proverbial haystack."

In both cases, the Cheat rewrite is so bad it's laughable, and pretty quickly identifiable as something other than student writing. Like I said, I don't think we have much to worry about with Cheat.

Bottom line

Here's the larger point: If universities take the approach of banning AI use, or if they don't help students understand appropriate AI use, we're going to have an arms race between AI detectors (which don't work) and AI maskers, such as Cheat (which don't seem to work either). This situation would result in losers all around, except for the developers, I suppose.

This is why I advocate for embracing AI use among our students while helping them understand how to use AI ethically and effectively. I'm a pragmatist, though, so I realize that faculty will need to rethink some of their assignments and learning assessments to make it harder for students to cheat with AI. (Yeah, I know, here's one MORE thing for faculty to do.)

GAI news

Commentary on article - Is it harmful or helpful

A recent article in the International Journal of Educational Technology in Higher Education has received a lot of attention online. The article is titled "Is it harmful or helpful? Examining the causes and consequences of generative AI usage among university students."

The study is interesting. The authors surveyed almost 500 students about their ChatGPT use, academic workload, time pressure, sensitivity to rewards, sensitivity to quality, procrastination, memory loss, and academic performance. The authors hypothesized that the first four affected ChatGPT use, which in turn affected the last three.

The authors found that ChatGPT use had a negative effect on academic performance and positive effects on procrastination and memory loss. In other words, their findings seem to establish that ChatGPT use hurts academic performance and leads to memory loss and procrastination. Put simply, ChatGPT use is bad. At least that's what many online commenters concluded.

Not so fast. Do the findings really say that? When I dug into the article, I wasn't able to draw the same conclusions. Overall, the article isn't bad. The authors check a lot of the normal methodological rigor boxes and they collected data in three waves, which was a good approach. There are some serious problems, though, when it comes to drawing the conclusion that ChatGPT use is harmful. Let's do a breakdown.

First, they didn't measure changes in procrastination, memory loss, or academic performance. They measured students' perceptions of these at a single point in time. So, we really don't know if these things changed during the period of ChatGPT use. This is a subtle but critical point. I think their findings can establish that there are correlations between ChatGPT use (or at least their measure of ChatGPT use) and students' perceptions of procrastination, memory loss, and academic performance, but that doesn't mean that ChatGPT caused these things. Causality is really hard to establish, and they haven't made a convincing case for causal effects.

Second, they only waited two weeks between collecting data on ChatGPT use and on the three outcome variables. I'm not sure this is long enough for ChatGPT use to have any meaningful effects. It might be, but I don't think so. It seems to me that it would take quite some time for ChatGPT use to move the needle on procrastination, academic performance, and memory loss.

Finally, although the authors collected their data in a reasonable way, I wonder about the direction of the effects. I think I could make a pretty good argument that students who procrastinate, think they're performing poorly, or suffer from poor memory might be more likely to use ChatGPT as a tool or as a crutch. So, we're back to the causality problem.

The authors pushed their conclusions a little too far for my taste, although they did use some equivocal language to hedge a bit. For example, they state "... our findings suggested that excessive use of ChatGPT can have harmful effects on students' personal and academic outcomes." That's an accurate statement for the most part, but only because they used the word "can." I do take exception to their use of "excessive," since they didn't measure excessive use; they just measured use. To be frank, I'm not sure what excessive use is.

I can't really fault the authors overall. They didn't do anything out of the norm and you can always find quibbles with scholarly work. My problem is that many people, including some in the media, either haven't taken the time or don't have the ability to really understand the article and its findings. They just say "See, ChatGPT is bad for students." I'm also concerned that those who are opposed to generative AI may use this study as "evidence" to argue for blocking its use. Now THAT would be bad.

Abbas, M., Jam, F.A. & Khan, T.I. Is it harmful or helpful? Examining the causes and consequences of generative AI usage among university students. Int J Educ Technol High Educ 21, 10 (2024). https://doi.org/10.1186/s41239-024-00444-7

How not to talk about AI in education

Recently, the London School of Economics posted an article, "How not to talk about AI in education." The article was written by digital education consultant Helen Beetham.

The article is well written and provocative (purposely so, I think). It makes some good points. For example, the author notes that AI isn't a single thing or a single use case. She claims it's best understood "... as a socio-technical project: one with significant implications for education." I totally agree. Most technologies are best understood when we consider how people interact with them and AI is no exception.

Where I start to take exception to the author's argument is when she writes that we shouldn't talk about AI as a collaborator. I think this is exactly how we should be thinking about generative AI. In fact, I'm becoming more and more convinced that the right way to think and talk about generative AI is as co-production: humans and AI tools working together to produce content. AI on autopilot may be good for some uses, but I think co-production is the right way to think about AI for many educational uses. When working well together, humans and AI can produce output more efficiently and effectively than either could working alone.

To be fair, much of what Ms. Beetham wrote under the heading "AI as a collaborator" makes sense. AI tools aren't meaning-makers; they're just synthetic machines that seem like they're creating meaning. But that's not the important thing in co-production. I have an article about AI co-production in the works. It will be in a future edition of AI Goes to College.

There's also a section titled "As the future of work," which seems to imply that we should NOT be talking about AI as the future of work. Again, I think the author is wrong here. AI is currently affecting work in many industries, and its effects on work will only increase in the future. What's interesting about much of this article is that the section headings are much more provocative than the text in the sections. The "As the future of work" section is a good example. When I read the heading, I immediately had a negative reaction, but when I read the section, I largely agreed with the author. One of her main points is that some workers will gain from AI and others will lose. I totally agree.

Overall, this article is worth reading. Ms. Beetham makes some good points and it's probably good to temper some of the hype around AI. Although I think she's wrong on some of her main points, she raises some others that seem legit to me. Read the article and let me know what you think: craig@AIGoesToCollege.com.

Link to article: https://blogs.lse.ac.uk/highereducation/2024/03/28/how-not-to-talk-about-ai-in-education/

Claude 3 takes the lead from GPT-4

The big generative AI news item from the past week is that Claude 3 Opus seems to have taken the performance mantle from GPT-4. (Claude 3 Opus and GPT-4 are the models underlying the most powerful versions of the popular Claude and ChatGPT chatbots.)

Claude 3 Opus is now the top-ranked model according to LMSYS's crowdsourced Chatbot Arena Leaderboard, which is hosted on Hugging Face. The Leaderboard uses over 500,000 human preference votes to rank the various models. The headline is a little misleading, though; the spread between Claude 3 Opus and two versions of GPT-4 is very narrow (within the margin of error), probably close enough to call it a tie, although Claude 3 Opus does come out on top. By the way, Google's Gemini Pro also did well, but it lagged Claude 3 Opus and GPT-4.

What was more remarkable to me is that the smallest version of Claude 3, Haiku, ranked 7th and blew GPT-3.5 out of the water. This is pretty amazing for a small, light, fast model. There's an article about Claude 3 Haiku later in this edition.

The Leaderboard is updated frequently. You can check it out at: https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard.

Tip of the week

Using Google Docs with Gemini

One of the most intriguing uses of generative AI is getting it to help you work with your own materials (documents, emails, spreadsheets, etc.). I tried Microsoft's Copilot for Microsoft 365 and found it to be pretty useless. I'm going to try again soon.

Google's Gemini offers some interesting possibilities. You can use Gemini with Google Docs in a couple of ways. One way is from within the document. Just click on the star icon, and a Gemini panel will pop up.

Currently, Gemini will automatically summarize the document. This, by itself, could be useful in many situations. Here's the summary from a recent extended abstract a colleague and I wrote. The summary seems reasonable. Gemini also gives some suggestions for additional prompts. One of these suggestions is pretty interesting, since reviewers basically asked for the same thing!

I went on and asked Gemini for a bullet-point overview, which turned out reasonably well. Then, I asked it to create a presentation from the document. At the moment, Gemini can't create the actual slide deck, but it did create a decent structure for the presentation. It was a little too detailed for my tastes, but it's not bad and could easily be used to jump-start creation of the actual slides. I'm hopeful that one day Gemini will be able to create the entire slide deck. (Hey, I can dream!)

Since I was already playing around, I asked Gemini what questions were likely to come up during my presentation. The response (below) was a little generic, but not entirely unreasonable. Since this only took a few seconds to do, I might do it for future presentations. You never know, Gemini might come up with something you hadn't thought of, which could be useful.

Although using Gemini within Google Docs seems to work well, I think you get better results from using Gemini's Docs integration function from within Gemini (rather than within Docs). In the example below, I used @Google Docs to access and summarize the same document. By the way, Gemini seems to work fine with Google Docs, but not with .docx files stored in your Workspace.

This is a better summary, in my opinion. It's a bit of an unfair comparison, since I used Gemini Advanced here, while I think the within-Docs example used the standard Gemini model. Next, I asked Gemini Advanced to create my presentation. The results were interesting and much better.

Notice that there are far fewer bullet points and each bullet point has less text. Gemini Advanced also included speaker notes, which is a nice addition.

I got curious about how Gemini (the non-Advanced version) would do, so I ran the same prompts through regular Gemini. To my surprise, the results were pretty similar.

There might be some minor differences, but this summary looks nearly identical to the one produced by Gemini Advanced, right down to the use of "thus" in the last sentence.

The slide outlines were different across the two versions of Gemini. Actually, I think I like regular Gemini's version better. One very odd aspect of Gemini's response was the inclusion of images for two of the slides. The images are kind of random, and I have no idea why it included images for two slides, but not the others. In fact, I didn't know Google had reinstated Gemini's ability to generate images. (I'm not sure these images were created by Gemini, now that I think about it. It's hard to tell.) I tried to get Gemini to redo the slide response with images for each slide, but it didn't work at all.

Overall, I think Gemini's Docs integration is promising. It still has its problems, though. For example, I was playing around with Gemini in Gmail and I clicked on a suggested prompt (What is this email about?). In an "SMH" (shaking my head) moment, Gemini told me it was still learning and couldn't help with that. I tried the same prompt with a different message and it worked. Who knows what's going on?

Gemini also integrates with other Google services (see below). I've played around with Google Flights and Maps, but didn't find them useful. Your experience may be different.

The YouTube integration is very interesting, however. My early testing indicates that Gemini is pretty good at summarizing videos. This has huge potential, so I'll do a write-up on this later.

Resources and Tools

The Curricula - An interesting new tool

One of my favorite newsletters, Recomendo (https://www.recomendo.com/subscribe), recently had a blurb about an interesting new AI tool, The Curricula (https://www.thecurricula.com/). The Curricula will create an AI-generated learning guide for virtually any topic.

The main page is pretty barebones, but I kind of like it. It's functional, although lacking in details. Using The Curricula is pretty simple. You just enter a topic or you choose from fifteen suggestions.

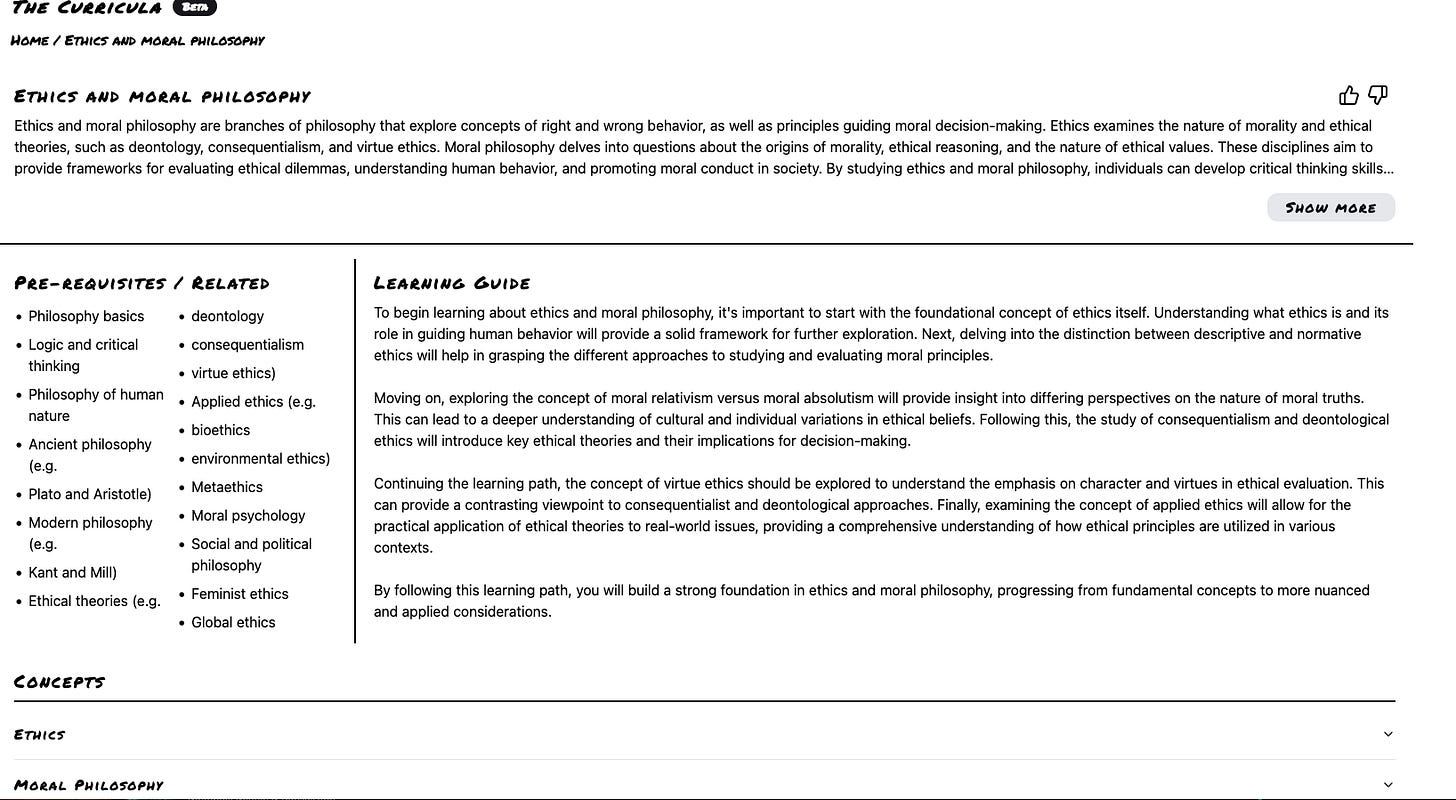

I chose Ethics and Moral Philosophy and got this. (The concept list is cut off in the screenshot. It includes eight important concepts in this case.)

The guide looks pretty reasonable to me, although I wish there were links for the prerequisite and related topics. The learning guide seems sound, although it's clearly AI-generated (which is fully disclosed on the website).

When you click on a down-arrow for one of the concepts, a short description appears, followed by a list of resources (books, articles, videos, and courses).

Overall, this looks pretty good to me. So, I decided to try a test by asking The Curricula to create a learning guide for decision making. Here's what I got. (The screenshot is pretty small, but you can check out the guide here: https://www.thecurricula.com/learn?q=Decision%20making).

Is this learning guide perfect? Probably not, but it's pretty amazing that you can create something this comprehensive in a matter of seconds. Frankly, I'm a little blown away.

One thing the guide didn't cover well was the topic of different decision-making tools. So, I asked the system to create a learning guide for decision-making tools. It did a nice job, including many standard decision-making methods (decision matrix, decision trees and some others). You can check that guide out at https://www.thecurricula.com/learn?q=decision%20making%20tools.

The Curricula has serious potential. I can imagine putting together the basics of a curriculum in a remarkably short period of time. Of course, you'd have to do a lot of tweaking to get things right, and you'd want to carefully verify the learning guide's content, but overall I think it would be a huge time saver.

There are also some clear applications for professional development. Schools could use The Curricula to help plan employee professional development opportunities or individual higher ed professionals could use it to create their own personalized learning guide.

One huge warning, though: it's not clear how long The Curricula will be around. It seems to be a personal project from software engineer Mike Dyer through his Chindogu Labs (https://www.chindogulabs.com/). So, it's not clear if The Curricula will ever be a commercial project. Even if it goes to Chindogu Labs' Graveyard of Projects Past, it's a great example of what's possible with generative AI.

Claude Haiku is impressive

There's been a lot of buzz around Anthropic's new Claude 3 models. I wrote about them in issue #4 (https://aigoestocollege.substack.com/p/ai-goes-to-college-no-4). When I wrote that, Claude 3 Haiku, the small and fast member of the Claude 3 family, hadn't been released publicly. It has been now, and it's impressive.

My initial test of Haiku (what a fun word!) was very promising. It was fast and the output was solid. So, I decided to do a side-by-side comparison of the three Claude 3 models (Opus, Sonnet, and Haiku).

Here's the prompt I used in all three:

I teach a junior level information systems class that's part of the business school core. Act as instructional expert who is also very adept at generative AI. Please give me 10 ways I can adapt my assignments to 1) make it harder for students to use generative AI inappropriately, and 2) leverage generative AI to enhance student learning.

Here's the result from Opus:

As an instructional expert with a strong understanding of generative AI, here are 10 ways you can adapt your assignments to prevent inappropriate use of AI and leverage it to enhance student learning in your junior-level information systems class:

Require students to include specific examples from class discussions or readings in their assignments. This ensures they engage with the course material and makes it harder for AI to generate relevant content without human input.

Incorporate reflective writing components where students discuss their learning process, challenges faced, and personal insights gained. AI struggles with generating authentic personal reflections.

Assign project-based learning tasks that involve multiple stages, such as planning, drafting, peer review, and revision. This iterative process is more difficult for AI to replicate convincingly.

Include oral presentations or recorded videos as part of the assignment submission. AI cannot effectively mimic individual students' verbal communication styles and mannerisms.

Utilize AI tools like GPT-3 to generate practice questions, prompts, or scenarios for students to analyze and respond to, enhancing their critical thinking skills.

Encourage students to use AI-powered writing assistants like Grammarly or Hemingway to improve their writing clarity and concision, while emphasizing the importance of maintaining their own voice and ideas.

Assign group projects that require collaboration and division of tasks among team members. AI cannot replicate genuine human collaboration and interaction.

Implement a two-stage assignment process where students first submit an outline or draft, receive feedback, and then submit a final version. This makes it harder for AI to generate a complete, polished assignment in one attempt.

Use AI to create personalized learning experiences by generating content, questions, or feedback tailored to each student's strengths, weaknesses, and interests based on their performance data.

Discuss the capabilities and limitations of AI openly with your students. Encourage them to use AI as a tool to enhance their learning, while emphasizing the importance of academic integrity and developing their own critical thinking and problem-solving skills.

By implementing these strategies, you can create assignments that are more resistant to inappropriate AI use and harness the power of AI to provide students with engaging, personalized learning experiences that foster their intellectual growth and practical skills in information systems.

That's an OK response, although the last two suggestions really aren't about adapting assignments.

Sonnet got even more off track. A few of the suggestions below are decent, but some seem to be suggestions about something other than modifying assignments.

As an instructional expert well-versed in generative AI, I can provide you with several strategies to address both concerns regarding the appropriate and effective use of generative AI in your junior-level information systems class. Here are 10 ways to adapt your assignments:

Personalized Prompts: Craft prompts that require students to incorporate specific details from their personal experiences, backgrounds, or perspectives. This personalization makes it harder for generative AI to produce relevant responses without additional context from the student.

Open-Ended Reflections: Assign reflective writing tasks that require students to critically analyze and synthesize course materials with their own thoughts and opinions. These subjective and open-ended prompts are more challenging for generative AI to replicate effectively.

Data Analysis and Interpretation: Provide students with datasets or case studies and ask them to analyze, interpret, and draw insights from the data. This type of assignment requires human reasoning and domain knowledge that generative AI may struggle with.

Collaborative Projects: Encourage group projects where students collaborate and build upon each other's contributions. The iterative nature of such projects makes it harder for generative AI to produce coherent and consistent outputs.

Multimedia Assignments: Incorporate multimedia elements, such as creating videos, podcasts, or presentations, that require students to demonstrate their understanding through various mediums. Generative AI may have limitations in producing high-quality multimedia content.

Code Review and Debugging: Assign coding tasks that require students to review, understand, and debug existing code snippets or programs. This exercise promotes critical thinking and problem-solving skills that generative AI may struggle with.

Ethical Discussions: Facilitate discussions and debates around the ethical implications of generative AI in various contexts, including information systems and business practices. This encourages students to explore ethical considerations and develop their own informed perspectives.

AI-Assisted Research: Leverage generative AI as a research assistant to help students gather and synthesize information from various sources, enhancing their research skills and broadening their understanding of topics.

Interactive Tutorials: Use generative AI to create interactive tutorials or simulations that allow students to explore and experiment with concepts in a hands-on manner, fostering deeper learning and engagement.

Peer Review and Feedback: Implement peer review processes where students provide feedback on each other's work using generative AI as a tool for analysis and suggestions. This promotes critical thinking, collaboration, and effective communication skills.

By implementing these strategies, you can discourage inappropriate use of generative AI while also leveraging its capabilities to enhance student learning, critical thinking, and engagement with course materials.

Let's see how Haiku does:

As an instructional expert with knowledge of generative AI, here are 10 ways you can adapt your assignments to prevent inappropriate use of AI and leverage it to enhance student learning:

Require students to document their learning process, including brainstorming, drafting, and revising. This makes it harder to simply use AI-generated content without demonstrating understanding.

Include reflective components where students discuss their thought process, challenges faced, and insights gained. AI struggles with this type of personal reflection.

Incorporate in-class activities or discussions that build on the assignments, so students need to deeply understand the material and can't just rely on AI.

Use AI detectors like GPT-2 Output Detector to identify AI-generated content. Discuss academic integrity and the appropriate use of AI tools.

Design assignments that require students to apply concepts to unique situations, such as case studies or real-world examples from their own experiences. This is harder for AI to generate.

Have students annotate assignments with their own thoughts, questions, and connections to other course content. This personal layer is difficult for AI to replicate.

Encourage students to use AI tools for brainstorming, outlining, and editing, but require them to substantially refine the AI-generated content and credit the use of AI.

Provide AI-generated examples and have students critique them, identify limitations, or suggest improvements. This develops critical thinking about AI.

Use AI to create interactive simulations or case studies that students can engage with to deepen their understanding of complex topics.

Leverage AI-powered discussion platforms that use natural language processing to provide personalized feedback and prompt deeper student engagement with the material.

The key is to design assignments that require higher-order thinking, personal reflection, and application to novel contexts. By openly discussing the use of AI and encouraging students to critically evaluate and build upon AI-generated content, you can harness its potential while ensuring academic integrity.

It comes as a surprise to me, but I think I like Haiku's response the best. All of these, except for the fourth suggestion (using AI detectors, which is just a bad idea all around), are directly related to modifying assignments.

What's the bottom line here? Don't just assume that the most powerful models will give the best results. Try different models when time and other resources allow. By the way, Poe.com is a great resource for trying out various models. I wrote about Poe in the first issue of AI Goes to College (https://aigoestocollege.substack.com/p/ai-goes-to-college-issue-1).