AI Goes to College - No. 4 - New Claude models, an interview, and the best $40 you can spend

Helping higher education professionals navigate generative AI

Welcome to issue 4 of AI Goes to College!

This week's issue includes:

Generative AI's paywall problem

Anthropic releases new Claude models that beat GPT

Google has a bad week

Why generative AI doesn't follow length instructions (and what you can do about it)

The best $40 you can spend on generative AI

More Useful Things releases some interesting AI resources

Chain of thought versus few-shot prompting

An interview with WSU's Rob Crossler

Before getting into the main content, I wanted to thank Grammar Nut for catching a typo on the AI Goes to College website. It's great to have sharp-eyed readers!

Rant of the week

Large language models are trained on data. Anything that isn't included in the training data isn't reflected in the output, and bias in the training data shows up as bias in the output.

I'm not stating anything groundbreaking in the above. Demographic-based bias is a well-known, serious ethical issue related to GAI. But, it recently occurred to me that biased training data results in a different problem for academic researchers. Now, I'm speculating here, since I really don't know what data the various LLMs are trained on. It seems to me, though, that the training data may underrepresent articles from top academic journals, which is a problem for those of us who want to use GAI tools to help with scholarship.

Here's my thinking. Many top journals are behind paywalls of various sorts. Typically, if I want a recent article from a top journal, I either have to access it through a professional association (Association for Information Systems, ACM, IEEE, etc. for me), or through my university's library. Sometimes I get lucky and it's available through Google Scholar or some non-paywalled repository. Eventually, many of these articles do make their way around the paywalls to become freely available, and more journals are adding open access options (for a hefty fee).

The training data could include abstracts, and citations from included articles can also introduce paywalled articles into the training data, but only in limited ways. The full text of the article would not be in the training data, which means that the article can't be fully represented in outputs.

The problem may be even greater for disciplines that rely more on books than journal articles. The extent of the problem (if it exists) is not clear to me, but I think I'll run some experiments to try to get a better understanding of this potential training data bias problem. For now, I advise caution. Don't over-rely on GAI shortcuts to replace the hard work of scholarship. They can help, but we still have to put in good old human thought and effort. Stay tuned for future developments (and rants).

Generative AI news

Anthropic releases new Claude models (and they're outstanding)

The big news this week comes from OpenAI competitor Anthropic, who released a new family of their Claude large language models. Claude is similar to GPT. The models perform similar functions and both families are available through an application programming interface (API) and through chatbots.

Anthropic released three models:

Claude 3 Haiku

Claude 3 Sonnet, and

Claude 3 Opus.

The names are kind of clever since they're loosely tied to the size and capabilities of the models. Haiku is fast and compact, but it's best for simple requests. In other words, it's the small, fast member of the family. Claude 3 Opus is the big dog model. It's the most intelligent of the three models and is intended for highly complex tasks, but it's also more expensive. Sonnet takes the middle ground, providing solid performance at a substantially lower cost than Opus.

Both Sonnet and Opus have 200K-token context windows. The context window is how much data the model can consider when creating its output. Think of it as the model's memory capacity. Roughly (VERY roughly) a 200K context window should be able to handle 300 to 400 pages of text. Opus can provide a context window of up to 1 million tokens for some uses, but that's reserved for special cases. The 200K context is twice that of earlier versions of Claude.
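For anyone curious where a page estimate like that might come from, here is a back-of-the-envelope sketch. Context windows are measured in tokens, not words. The conversion ratios below (about 0.75 English words per token, and 400 to 500 words per page) are my assumptions for illustration, not figures from Anthropic, and the function name is made up for this example.

```python
# Rough estimate only: these ratios are assumptions, not published figures.
WORDS_PER_TOKEN = 0.75  # assumed average for ordinary English text


def estimate_pages(context_tokens: int, words_per_page: int) -> int:
    """Estimate how many pages of text fit in a context window."""
    words = context_tokens * WORDS_PER_TOKEN
    return round(words / words_per_page)


# Dense pages (about 500 words) versus lighter pages (about 400 words).
dense_pages = estimate_pages(200_000, words_per_page=500)
light_pages = estimate_pages(200_000, words_per_page=400)
print(f"About {dense_pages} to {light_pages} pages")
```

Change the assumed ratios and the answer moves quite a bit, which is why the estimate is VERY rough.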

Be careful though. I access the Claude models through Poe. In Poe, Claude 3 Opus and Claude 3 Opus - 200K are very different models. According to Anthropic, Claude 3 Opus is a smaller model with about 1.3 billion parameters and Claude 3 Opus 200K has 200 billion parameters. The number of parameters is the number of values in the neural network that defines the model's knowledge and behavior.

The context window is a big deal; larger windows open up new possibilities. For example, Claude 3 can summarize and synthesize multiple journal articles in a single session. I'll be testing this capability soon and will let you know how it goes.

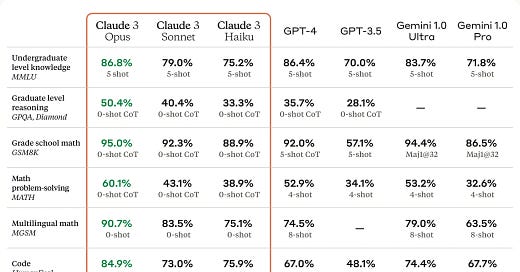

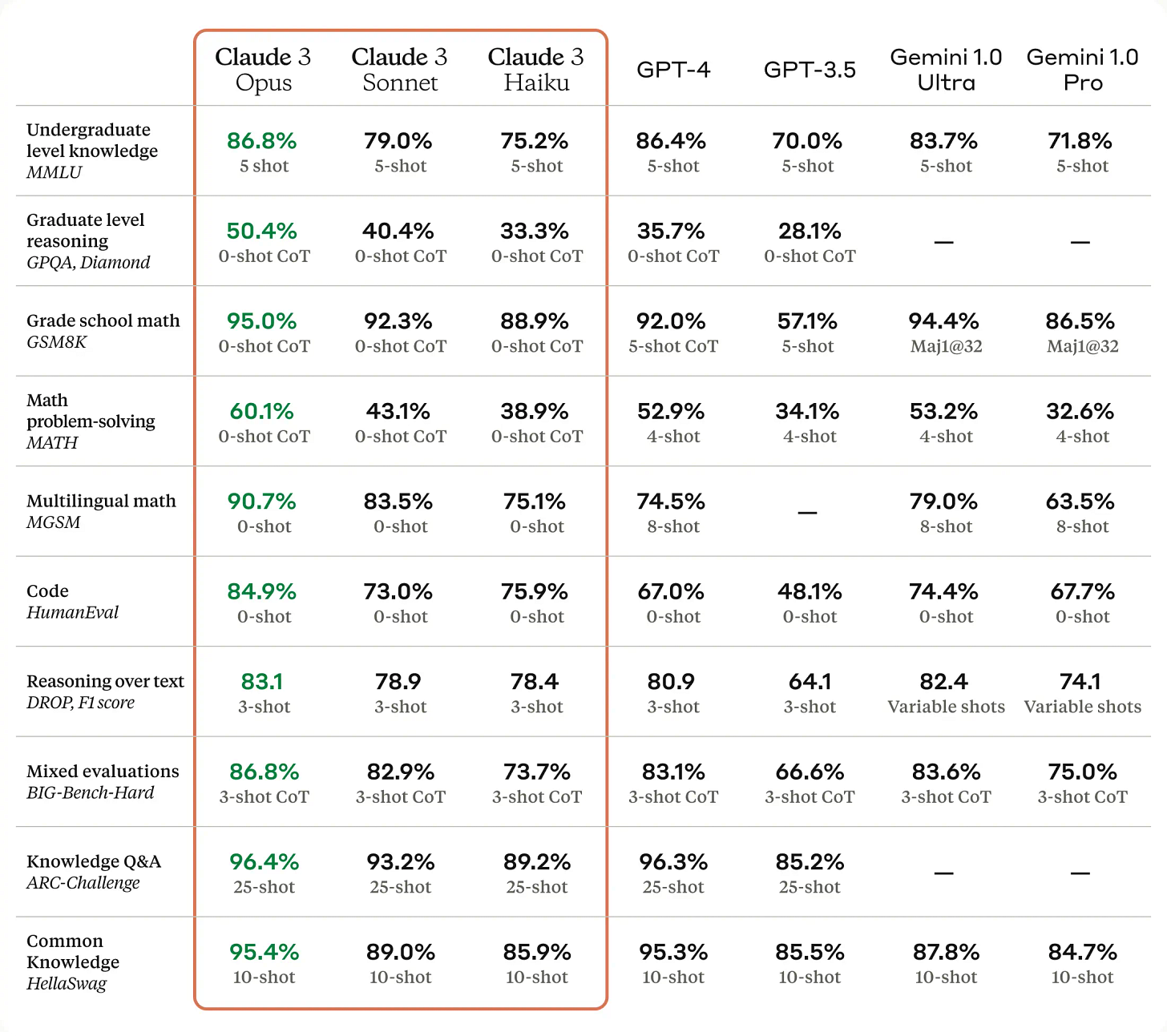

Another big deal about the Claude 3 announcement is that, according to Anthropic, Claude 3 outperforms GPT across the board. Check out this table, which is from Anthropic's announcement of the new Claude models (Source: https://www.anthropic.com/news/claude-3-family).

The results are impressive. Claude 3 Opus outperforms GPT-4 and Gemini Ultra across the board. Claude 3 should also have fewer hallucinations than the earlier models. I’m always a little skeptical of how well benchmark tests equate to real-world performance, but these results argue for checking out Claude 3.

My initial test of Claude 3 Opus was interesting and not very satisfying, but I think some of this was my fault. I'll provide details in a later edition of AI Goes to College. By the way, the Claude 3 models are available to paid users of Poe, which is another reason to check out Poe.com if you haven't already done so.

The bottom line is that the release of the Claude 3 models is a good thing. I hope the various GAI vendors continue to push each other and therefore expand what's possible with these tools.

Google's bad week and the future of generative AI

Google was in the news recently, and not in a good way. They had to pause Gemini's image generation function due to complaints from users. As of March 8, 2024, the function is still unavailable.

The basic problem was that Gemini was creating some ... interesting images, including America's Founding Fathers depicted as black and the Pope as a woman. The world being what it is, there was considerable outrage, although some of us had a bit of a chuckle over the incidents.

According to Google Senior Vice President Prabhakar Raghavan, the problem came about through fine tuning of Gemini's image generation model (Imagen 2). The fine tuning was intended to prevent the tool from "... creating violent or sexually explicit images or depictions of real people." Engineers were also trying to ensure gender and ethnic diversity.

Unfortunately, it seems like Google overcorrected, which resulted in some curious and historically inaccurate images. Get used to this. Widespread generative AI is still very new and we're still trying to figure out how to implement appropriate guardrails. In fact, there's not even widespread agreement on what those guardrails should be, although there are many ongoing conversations about appropriate guardrails.

So, it's likely that we'll continue to see problems, followed by overcorrections; these will go back and forth like a pendulum until we find the right balance between freedom of use and freedom from harm. I'm confident that we'll get there eventually.

In the meantime, I think there's an important message here for higher ed professionals. Before relying on generative AI for anything important or anything that might be seen by the public, be sure to subject the output to human review. Make a human check part of your processes whenever you use generative AI. That sort of review might not have been practical for Google, but it almost surely is for most of us in higher ed. Remember, "AI made me do it" is not a valid excuse when things go wrong.

Here are some links in case you want more details:

https://www.npr.org/2024/02/28/1234532775/google-gemini-offended-users-images-race

https://blog.google/products/gemini/gemini-image-generation-issue/

Tip of the week

Why doesn't generative AI follow length instructions?

Friend and listener, Ralph, sent me an email asking me why generative AI is so bad at following instructions about output length. Ralph has an awesome daily podcast that focuses on financial health; I highly recommend it. Check it out at https://www.askralphpodcast.com/. You'll be glad you did!

Many of you have probably experienced the length problem. You tell ChatGPT (or some other tool) to produce output of 500 words and there's no telling how long the output will be (but it won't be 500 words!). You might get 200 words or 1,000. This can be pretty frustrating. I decided to ask Gemini about this persistent problem with AI tools. I actually kind of liked Gemini's response, so I decided to include it here verbatim. You can follow the entire conversation here: https://gemini.google.com/app/a50f7d149eca00c1; the most relevant bits are below.

[Prompt]

Let's try this. Please write a blog post of between 350 and 500 words on how to prompt GAI so that it will produce output of the desired length. Make the tone authoritative, but conversational. Do you need any other details in order to complete this task?

[Response] (Disclaimer: This is Gemini's response; I didn't write it and I didn't edit it.)

Taming the Word Count: How to Get the Right Length from Your AI Chatbot

Ever ask an AI chatbot for a short story and end up with a novel? Or maybe a quick explanation ballooned into a textbook chapter? Don't worry, you're not alone. Getting AI to stick to a word count can be a bit like herding cats, but it's definitely doable.

Why AI Word Counts Get...Creative

First, remember that AI language models aren't word processors. They work with text differently than we humans do, and they're constantly trying to be helpful and comprehensive. This sometimes means they decide 300 words just doesn't cut it to fully explain the intricacies of quantum physics.

The Tricks of the Trade

So, how do we wrangle those word counts? Here are some essential tips:

Give a Range, Not a Target: Instead of demanding a strict 500 words, try something like "between 350-500 words." This gives your AI friend some breathing room.

Show, Don't Just Tell: Provide examples of writing that fits the length you want. These act as awesome templates for the AI to follow.

Expand Carefully: Start smaller and build up. Ask for a short summary first, then request more detail on a specific point. This gives you more control.

Words Matter: Sometimes, how you phrase your request makes a difference. "Summarize this topic in about 400 words" might work better than "Write 400 words on this topic."

Remember, It's a Process!

Getting the perfect length every time takes some practice. Think of working with AI like a conversation; you'll both learn how to communicate better with a little trial and error.

Need more details? I'm happy to tweak this further. Would you like it to be more technical, include specific examples, or focus on a particular type of AI output (like stories vs. reports)?
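If you'd rather check the length than eyeball it, a few lines of code can count the words and draft a follow-up request. This is only a sketch: the function names and the wording of the follow-up prompts are mine (hypothetical), and the 350 to 500 word range is simply the one from my prompt above.

```python
def word_count(text: str) -> int:
    """Count words by splitting on whitespace."""
    return len(text.split())


def length_feedback(text: str, min_words: int = 350, max_words: int = 500) -> str:
    """Return a follow-up message based on how long the draft is."""
    count = word_count(text)
    if count < min_words:
        return (f"The draft is {count} words. Please expand it to "
                f"between {min_words} and {max_words} words.")
    if count > max_words:
        return (f"The draft is {count} words. Please trim it to "
                f"between {min_words} and {max_words} words.")
    return f"The draft is {count} words, which is within the requested range."


# Paste the chatbot's output in place of this stand-in text.
draft = "word " * 200
print(length_feedback(draft))
```

Telling the chatbot the actual count gives it something concrete to work from in the next round.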

Resources and Tools

The best $40 you can spend on generative AI

Monthly software subscriptions are getting out of hand. I'm afraid to even think about how much I'm spending each month at $5, $10, or $20 a pop. Despite this, I recently added a $40 per year subscription and it's already proving to be among my best software investments ever. This investment not only helps with my GAI use, it also helps with writing virtually anything and, perhaps best of all, it tremendously speeds up my grading.

What, pray tell, might this magical bit of code be? No, it's NOT another GAI tool, it's a text expander. A text expander replaces a short bit of text with a longer bit. For example, when I want to type the web address for AI Goes to College, I simply type ";uaig" and the text expander types https://www.aigoestocollege.com/. (The ";" is used to indicate that what follows may be the abbreviation for a text snippet. The semi-colon works well because it's usually followed by a space.) Saving a second or two doesn't sound like a big deal, but in the aggregate it can save a tremendous amount of time. Text expanders can work with larger chunks of text. I often have to provide my biography for various purposes. Usually, I'd have to go find the Word file and either attach it to an email or copy and paste it into a message. Now I simply type ";bio" and my bio pops up.
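For the curious, the core idea behind a text expander fits in a few lines of code. This is a toy sketch of the concept, not how TextExpander or any other product actually works. The snippet table, the function name, and the placeholder bio text are all made up for illustration.

```python
import re

# Hypothetical snippet table: abbreviation -> expanded text.
SNIPPETS = {
    ";uaig": "https://www.aigoestocollege.com/",
    ";bio": "[full biography text would go here]",
}

# An abbreviation is a semicolon followed immediately by lowercase letters.
ABBREVIATION = re.compile(r";[a-z]+")


def expand(text: str) -> str:
    """Replace known abbreviations and leave everything else untouched."""
    return ABBREVIATION.sub(
        lambda match: SNIPPETS.get(match.group(0), match.group(0)), text
    )


print(expand("Visit ;uaig for more."))
# An ordinary semicolon followed by a space is left alone.
print(expand("I came; I saw."))
```

Because a normal semicolon is followed by a space rather than a letter, everyday punctuation never triggers an expansion.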

What does this have to do with GAI? Well, as you become more experienced with GAI, you'll find yourself entering the same instructions and prompts over and over. I use "What do you think?" frequently. Now, I just type ";wdyt". As your prompts get more complex, text expanders become even more useful. Here's an example.

Since I use GAI for a lot of tasks, I often have to provide some context. For example, I try to tell GAI whether I'm working on something related to my teaching, or one of my podcasts or this newsletter. When I'm working on AI Goes to College, I start with the following:

I'm working on a newsletter and companion podcast called "AI Goes to College." The goal of the project is to help higher education professionals (faculty and staff) navigate generative AI (GAI) and its impacts on higher education. The newsletter and podcast will 1) inform the audience about recent relevant developments in GAI as it relates to higher education, 2) provide tips and hints about using GAI effectively and ethically, and 3) discuss important issues related to the intersection of GAI and higher education. Please use this description when developing your responses.

So, I set up a text expander abbreviation (;daig) for these instructions. Instead of having to retype the instructions every time, or copying and pasting from a document, I just type five characters. I use TextExpander (https://textexpander.com/) since it works with PCs and Macs. There’s a 30-day trial and it’s only $40 per year or $4.16 per month after the trial. There are numerous other text expanders, but I’m pretty happy with TextExpander. I want to give a little shout-out to Dave Jackson (https://schoolofpodcasting.com/). Dave mentioned text expanders on an episode of his podcast, so I started checking them out and they’ve saved me a TON of time since then. Thanks, Dave!

More Useful Things - AI Resources

Ethan Mollick, creator of the One Useful Thing newsletter (which is VERY useful) and Lilach Mollick recently released a new website, More Useful Things (https://www.moreusefulthings.com/prompts).

More Useful Things includes an AI resources page that provides (surprise, surprise) some useful AI resources. Ethan is one of my favorite resources on the intersection of AI and education, so I was excited to see this new resource.

There are three sections: Ethan's book (Co-Intelligence: Living and Working with AI), which I've pre-ordered and look forward to reading, a prompt library, and "other" resources. Other resources includes a nice video on AI along with links to their AI teaching and learning focused research.

My focus here is on the prompt library. It includes prompts to help instructors prepare for teaching, prompts related to student activities, and an "other prompts" section that provides some miscellaneous prompts, including one called "Academic Paper Creator for GPT4," which looks interesting and concerning.

Instructor aid prompts include some interesting examples such as a simulation creator, a quiz creator and an active learning co-creator, among others. Some examples of student exercises include general tutor prompts for GPT4, Claude, and Gemini Advanced, negotiation simulators and an interesting-looking devil's advocate prompt.

The length and complexity of the prompts surprised me. The simulation creator prompt is over 600 words long. There's nothing wrong with this at all. In fact, such complex prompts are often very effective. But, don't let the complexity of these prompts scare you. You can accomplish a lot with pretty simple prompts; both simple and complex prompts have their places.

I recommend checking out More Useful Things. Take a close look at some of the prompts and I think you'll learn some things about how to structure complex prompts. Their examples may also give you some new ideas about how you can use generative AI.

Keep in mind that complex prompts like these are worth developing when you're going to reuse them frequently. Otherwise, using simple prompts and refining the output may be a better approach.

Chain of thought versus few-shot prompting

Recently, I was listening to an episode of Dan Shipper's "How Do You Use ChatGPT?" Dan was interviewing Nathan Labenz on how he uses ChatGPT as a "copilot" for learning. As always, the episode was interesting. One thing that caught my attention was the discussion of chain of thought versus few-shot prompting. Fair warning: This is a little advanced, although I've done my best to keep things straightforward and easy to understand.

Few-shot prompting is pretty easy to understand. Just follow your task description with a few examples of the kind of output you want.
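If you like to see structure spelled out, here's a small sketch that assembles a few-shot prompt from a task description and some examples. The function name, the "Input:" and "Output:" labels, and the learning-objective examples are all hypothetical choices of mine. There's no required format, and you can build the same prompt by hand in a chat window.

```python
def build_few_shot_prompt(
    task: str, examples: list[tuple[str, str]], new_input: str
) -> str:
    """Combine a task description, worked examples, and a new input."""
    lines = [task, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # End with the new input and an empty output for the chatbot to fill in.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    task="Rewrite each learning objective so it starts with a measurable verb.",
    examples=[
        ("Understand supply and demand.",
         "Explain how supply and demand determine price."),
        ("Know the parts of a cell.",
         "Label the major parts of a cell on a diagram."),
    ],
    new_input="Appreciate the causes of World War I.",
)
print(prompt)
```

Pass a single example and you have a one-shot prompt; pass an empty list and you're back to a plain instruction.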

Chain of thought prompts are more complex. Briefly, chain of thought prompting asks ChatGPT (or another generative AI chatbot) to think aloud. Here's an example of a chain of thought prompt that asks ChatGPT to explain chain of thought prompting. (By the way, I asked ChatGPT to create this prompt for me, which can be a good way to develop prompts.)

Explain the concept of chain of thought prompting using the chain of thought approach. Begin by defining what chain of thought prompting is. Next, break down the process into its key components, explaining each one step by step. Then, illustrate how these components work together to guide an AI in processing and responding to complex tasks. Finally, conclude by summarizing the advantages of using chain of thought prompting in AI interactions, especially in educational contexts.

Here's the result: To explain the concept of chain of thought prompting using the chain of thought approach, let's start by defining what chain of thought prompting is. Chain of thought prompting is a method of interacting with AI models where the user guides the AI through a series of logical steps or a thought process. This approach is used to tackle complex problems or questions that require more than a simple, direct answer.

Now, let's break down the process into its key components and explain each one step by step:

Identifying the Problem: The first step is to clearly state the problem or question that needs to be addressed. This sets the stage for the AI to understand what it needs to solve.

Decomposing the Problem: Here, the problem is broken down into smaller, more manageable parts. This step is crucial because it simplifies the problem, making it easier for the AI to process and address each part sequentially.

Sequential Reasoning: The AI then goes through each part of the problem, applying logical reasoning. This involves step-by-step processing, where the AI not only identifies relevant information but also explains how it is using this information to reach a conclusion.

Synthesizing the Solution: After addressing each part, the AI synthesizes the information from all the steps to form a comprehensive answer or solution to the original problem.

Reflection or Further Implications: Optionally, the AI can reflect on the solution, providing insights into its significance or the implications of the solution.

Now, let's illustrate how these components work together to guide an AI in processing and responding to complex tasks. When presented with a complex problem, the AI first understands and defines the scope of the problem. It then breaks the problem down into smaller steps, addressing each step with logical reasoning. This process is transparent, allowing the user to see how the AI is thinking and how each step leads to the next. Finally, the AI combines all these steps to present a final solution that is not only accurate but also explained in a way that is easy to understand.

In conclusion, the advantages of using chain of thought prompting in AI interactions, especially in educational contexts, include promoting a deeper understanding of the problem-solving process, enhancing the transparency of the AI's thought process, and potentially leading to more accurate and detailed answers. This approach is particularly beneficial in education, as it mirrors the way humans solve problems, making it a valuable tool for teaching and learning complex concepts.

Wow! That's a lot of explanation.
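The prompt I used follows a simple pattern: state the task, then list the reasoning steps in the order you want them handled. Here's a minimal sketch of that pattern. The function name, the instruction wording, and the example task are my own (hypothetical), so treat this as one way to lay out a chain of thought prompt rather than the definitive one.

```python
def build_chain_of_thought_prompt(task: str, steps: list[str]) -> str:
    """Combine a task with numbered reasoning steps to work through."""
    numbered = [f"{number}. {step}" for number, step in enumerate(steps, start=1)]
    lines = [
        task,
        "",
        "Work through the following steps in order, explaining your reasoning at each one:",
        *numbered,
        "",
        "Finish with a short summary of your conclusion.",
    ]
    return "\n".join(lines)


cot_prompt = build_chain_of_thought_prompt(
    task="Explain why a 500-word limit is hard for a chatbot to follow.",
    steps=[
        "Define the key terms.",
        "Break the problem into its main components.",
        "Show how the components work together.",
    ],
)
print(cot_prompt)
```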

So, which should you use? Well, that depends. For most tasks, I think few-shot prompting works pretty well. But few-shot prompts require knowing what good output will look like. That's not always the case. Also, you'll get better results with chain-of-thought if you're tackling a complex problem.

If you're a beginner, stick with few-shot prompts. Even one-shot prompts, which include only a single example, will work well for many tasks. I often don't include an example at all. If the results aren't appropriate, I'll try a different approach.

There are two messages here. First, keep things simple when you can. Simple is often effective. Second, don't be afraid to experiment with different approaches and even to blend approaches. Prompting can be simple or complex. The choice is yours.

Interview with Dr. Rob Crossler of Washington State University

Last week, Dr. Rob Crossler of WSU and I sat down and had a fascinating discussion about generative AI and how it's affecting higher education. Rob is an accomplished scholar, department chair, and one of those folks who always has some interesting insights. (He's also my friend and co-author.)

There's not enough room in the newsletter to relate our entire conversation, so I highly recommend checking out the interview on the AI Goes to College podcast, which is available at https://www.aigoestocollege.com/ and on all major podcast apps. Here are some highlights.

Rob shared how he's using generative AI to create more creative assignments and to generate questions. We also discussed how to use AI tools to help students explore and understand important concepts.

Rob emphasized the importance of being willing to experiment with AI and accepting that experiments sometimes fail.

We talked about the importance of helping students become confident, but critical users of AI tools.

We shared some of our favorite examples of using generative AI in our classes.

Rob and I also discussed how AI will affect the job market and what that means for our students.

Rob shared some interesting administrative uses for generative AI.

Rob is a really smart guy with a lot of experience in higher ed, so I urge you to check out the full interview.