In just the last couple of weeks, we’ve seen a flurry of new generative AI reasoning models: DeepSeek, OpenAI’s o3-mini, and Gemini Advanced 2.0 Flash Thinking, to name a few. These new models work in a fundamentally different way from their predecessors. I’m going to simplify here, but the basic idea is this. The “old” models (such as GPT-4o, which really isn’t that old) rely on probabilities and pattern matching, generating answers without first carefully thinking through the problem or task. You can push these models to think first and then answer by using techniques like chain-of-thought prompting, but that can get complicated and isn’t entirely reliable.

How Reasoning Models Work

Reasoning models are different. They think through how to carry out the task BEFORE responding. Only after working out an approach do the models actually respond. Here’s an example. I asked ChatGPT o3-mini the following question:

Why is the advance of reasoning models (in generative AI) such an important development? How do they differ from models like GPT 4o?

Before responding, ChatGPT thought for nine seconds. Here is its description of its thought process.

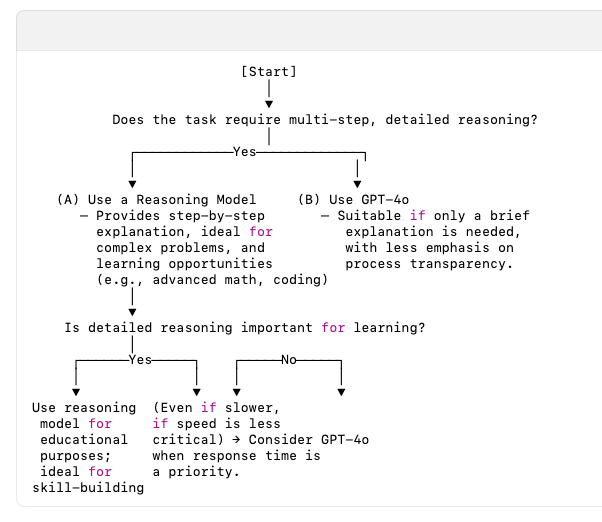

You can see how it first thought about the question before answering it. Here’s an even more interesting example. I asked ChatGPT to create a decision tree to help me choose whether to use a reasoning model or a regular model to complete a task. This time ChatGPT thought for 12 seconds.

Again, you can see each step in its thinking process. (The decision tree is at the end of this article, if you’re interested.)

A Deep Dive into AI Reasoning

o3-mini is pretty stingy in its reasoning explanations. Gemini 2.0 Flash Thinking is much more verbose … MUCH more. When I gave Gemini the original prompt about reasoning models, it responded with this (keep scrolling, it’s pretty long!).

Here's a thinking process to construct the answer about the importance of reasoning models and their difference from models like GPT-4o:

Deconstruct the Question: The prompt has two main parts:

Why are reasoning models important?

How do they differ from models like GPT-4o?

Address the "Importance" of Reasoning Models (First Part): Think about the limitations of current generative AI. What are they not good at? This naturally leads to the advantages of reasoning models.

Brainstorm Limitations of Current Generative AI (like GPT-4o in its non-reasoning modes):

Hallucinations (factual inaccuracies).

Lack of true understanding (surface-level pattern matching).

Difficulty with novel situations (training data dependence).

Inability to explain why they give an answer.

Limited capacity for complex problem solving that requires multi-step inference.

Susceptibility to adversarial attacks (manipulation).

Bias amplification.

Connect Limitations to the Need for "Reasoning": For each limitation, ask: "How would reasoning models address this?"

Hallucinations -> Reasoning can verify facts, check consistency.

Lack of understanding -> Reasoning implies deeper comprehension of relationships, causality.

Novel situations -> Reasoning allows applying learned principles to new contexts, not just memorized patterns.

No explanations -> Reasoning processes can be traced and explained, offering transparency.

Complex problem solving -> Reasoning supports step-by-step deduction, planning, and strategic thinking.

Adversarial attacks -> Reasoning may make models more robust by understanding the underlying logic, not just surface features.

Bias -> Reasoning might help identify and mitigate biases by analyzing the underlying assumptions and logic.

Categorize the Benefits: Group the addressed limitations into broader themes. "Trustworthiness," "Problem-Solving Power," "Adaptability," "Explainability," are good high-level categories.

Elaborate on each Benefit: For each theme, provide concrete examples and explain why reasoning models are important in achieving that benefit. Think about real-world applications.

Address the "Difference" from Models like GPT-4o (Second Part): Focus on how reasoning models are different in their architecture and approach compared to models like GPT-4o. A good comparison requires understanding what GPT-4o is (or models in its general category).

Characterize GPT-4o (and similar models):

Primarily based on large language models (LLMs) and transformer architectures.

Trained on massive datasets to predict the next word.

Excel at pattern recognition, text generation, translation, summarization, etc.

Implicitly learn some "reasoning" from data, but it's more correlational and pattern-based than explicit.

"Black box" nature – internal workings not always transparent.

Define "Reasoning Models" and their Key Features: What makes them distinct?

Explicit Reasoning Mechanisms: Key difference. Think about what these mechanisms could be:

Symbolic reasoning (logic, rules).

Knowledge graphs and ontologies.

Causal reasoning.

Probabilistic reasoning.

Planning algorithms.

Step-by-step deduction.

Decomposition of problems.

Integration of Knowledge: May use structured knowledge bases, not just raw text.

Transparency and Explainability: Built-in ability to show how they arrived at an answer.

Focus on Truth and Validity: Aim for logically sound conclusions, not just statistically likely text.

Structure the "Difference" Section: Use a comparative approach. Highlight the key contrasting features. A table might be too rigid, but structured paragraphs work well. Focus on:

Underlying mechanism (pattern matching vs. explicit reasoning).

Knowledge representation (raw text vs. structured knowledge).

Explainability (implicit vs. explicit).

Reliability (statistical vs. logical soundness).

Generalization (data-driven vs. principle-driven).

Refine and Structure the Answer:

Introduction: Start with a clear, concise statement acknowledging the importance and the core distinction.

Importance Section: Use clear headings for each benefit (Trustworthiness, Problem-Solving, etc.). Use bullet points for clarity within each section.

Difference Section: Use clear headings to distinguish between GPT-4o type models and Reasoning Models. Use bullet points to highlight key contrasts.

Conclusion: Summarize the key takeaways and reiterate the future potential.

Review and Enhance:

Clarity and Conciseness: Remove jargon or explain technical terms. Ensure the language is accessible.

Completeness: Have I addressed all aspects of the question? Are there any important nuances missing?

Accuracy: Are my descriptions of GPT-4o and reasoning models accurate and up-to-date?

Flow and Logic: Does the answer flow logically? Is the structure clear and easy to follow?

Examples: Add concrete examples where appropriate to illustrate the benefits and differences. (Though the example provided in the prompt is quite high-level, concrete examples in areas like scientific discovery, medical diagnosis, etc., could be imagined.)

By following these steps – deconstruction, brainstorming, categorization, comparison, structuring, and refinement – you can construct a comprehensive and well-organized answer that addresses all aspects of the prompt.

Wow! That is impressive. If you take the time to read all of the above, you’ll see that it’s a sensible way to work through the problem. There are countless ways to approach such a problem, but this process of deconstruction, brainstorming, categorization, structuring, and refinement is certainly sound.

Gemini’s answer was, in my opinion, much stronger than ChatGPT’s. It even created a useful table of the differences between reasoning models and GPT-style models. The first part of the table is below. If you want to see the full table, you can check it out here. By the way, Gemini has a one-click “Export to Sheets” function, which made it easy to create the linked table.

AI Evaluating AI

Taking this a step further, I asked ChatGPT o3-mini to analyze Gemini's thinking process. The critique was both detailed and insightful, examining each step of Gemini's methodology. ChatGPT's summary captured the essence:

Overall, your process is comprehensive and logically sound. It not only lays out a clear pathway for discussing the importance of generative AI reasoning models but also ensures that you cover both their theoretical benefits and practical distinctions from current models like GPT-4o. This methodical approach should lead to a well-argued and insightful final answer.

It’s nice to see AI chatbots complement each other, but what’s especially interesting here is how one AI model can systematically evaluate another model’s reasoning approach. That is the kind of meta-cognitive analysis reasoning models aim for. The constructive nature of this AI-to-AI analysis makes me wonder if we’re entering an era in which it makes sense to use AI models to meaningfully build on each other’s work.

Implications for Higher Education

What does this mean for higher education? I see two important developments here. First, used cleverly, reasoning models can help students improve their critical thinking skills. Here’s an example of how we might do this.

Give students a problem or task that involves multi-step reasoning, such as deciding whether to take a job. Almost any complex problem would do.

Ask students to engage in some meta-cognition and then write out the steps they would take to solve the problem. Don’t ask them to actually solve the problem; just have them record how they would reason through it.

Pose the same problem to a reasoning model, then give students the breakdown of the model’s thinking process. (You could also have students do this themselves.)

Ask students to compare their reasoning process to that of the reasoning model. Have them comment on the differences and similarities.

Direct students to adjust their reasoning plan. Then have them actually solve the problem.

This is just a sketch of a possible activity, but it is enough to give you an idea of what might be possible.

The second implication for higher education is that reasoning models make AI more transparent. Yes, we still don’t know what’s really going on inside the models, but being able to see the model’s thinking process gives us some insight into how the model operates. It’s a small step in the right direction.

At a higher level, this is yet another example of how rapidly the AI landscape is changing. The turbulent nature of AI isn’t going to change any time soon. Remember, you do not need to adopt every new model or tool the instant it comes along. It’s OK to take some time to figure out how new developments might impact you. Stressing over the onslaught of developments is counterproductive, especially given the current state of AI hype coupled with the fact that some tools will disappear as quickly as they came into view. Of course, I’m here to help. Just be sure to stay subscribed.

Want to continue this conversation? I'd love to hear your thoughts on how you're using AI to develop critical thinking skills in your courses. Drop me a line at Craig@AIGoesToCollege.com. Be sure to check out the AI Goes to College podcast, which I co-host with Dr. Robert E. Crossler. It's available at https://www.aigoestocollege.com/follow.

Looking for practical guidance on AI in higher education? I offer engaging workshops and talks—both remotely and in person—on using AI to enhance learning while preserving academic integrity. Email me to discuss bringing these insights to your institution, or feel free to share my contact information with your professional development team.