Google's Gemini, Perplexity.ai, and a little experiment succeeds (mostly)

AI Goes to College - Issue #2

Welcome to Issue #2 of AI Goes to College! The newsletter is devoted to helping higher education professionals navigate the world of generative artificial intelligence (GAI) so that they can use it ethically and effectively.

This week's issue includes:

A rant about misleading headlines

Google releases a new model and rebrands Bard to Gemini

A brief review of Perplexity.ai, an intriguing GAI tool that seems tailor-made for higher ed (even though it isn't), and

Some promising results from an experiment in assignment redesign

The highlights of this newsletter are available as a podcast, which is also called AI Goes to College. You can subscribe to the newsletter and the podcast at https://www.aigoestocollege.com/. You can also subscribe through Substack.

Rant of the week

Cengage, the course material publisher, recently released its 2023 Digital Learning Pulse Survey (https://info.cengage.com/LP=5443). As far as I can tell, the survey gathered data about AI for the first time. The results are interesting, especially since only 23% of faculty at four-year schools thought their institutions were prepared for AI-related changes; only 16% of faculty at two-year schools thought their institutions were prepared. 41% of faculty across the two types of institutions thought that GAI would bring considerable or massive amounts of change to their institutions.

Here are the specific GAI-related questions:

Do you think your institution is prepared for AI-related changes?

How much will AI tools change your institution over the next five years?

I'm not entirely sure what to make of this survey. The questions are kind of vague, which is typical for these sorts of surveys, but I suppose that we can say that relatively few faculty feel prepared for the likely changes AI will bring. This should concern those of us in higher ed, but it shouldn't come as a surprise. Who knows what changes AI will bring in the next five years? I don't. If you do, you should get into consulting right away!

This leads me to my main point: We're going to see a lot of these sorts of surveys, and many will lead to click-bait headlines, many of which will be misleading. The headline of the article that took me to the survey was "Study Reveals Only 16% of Faculty is Ready for GenAI in Higher Education." The problem with these sorts of headlines is that they can paint misleading pictures. The headline above is a good example. Not only did it include only the lower number (16% of two-year faculty rather than 23% of four-year faculty), but the question also didn't ask whether faculty thought they were ready; it asked whether they thought their institutions were ready. These are VERY different issues. Even worse (maybe), the survey questions didn't ask about generative AI; they asked about AI. Maybe those mean the same to some people, but there's a lot of AI that's not generative AI.

As the AI/GAI hype continues, we're going to be exposed to a ton of similar studies and sensationalistic headlines. Be skeptical, my friends.

Despite my concerns about the survey and the article, I do think that collectively, we're not especially well prepared for the changes GAI (and AI in general) will bring to higher education. As I said, I don't know what those changes will be, but I'm pretty confident in saying that they won't be trivial.

AI in higher ed news

Google Gemini

Google recently launched a major update to its generative AI tool and rebranded its GAI efforts from Bard to Gemini (https://gemini.google.com/). At the moment, there are two versions, Gemini and Gemini Advanced, which are similar to ChatGPT and ChatGPT Plus. The nomenclature is confusing (at least it is to me). Gemini is a family of models: Ultra (the big dog, high-performance model), Pro, and Nano (a light version optimized for efficiency). Since I was confused, I asked Gemini Advanced to explain. I'll repeat its response here, verbatim.

Ultra, Pro, and Nano: Different Tools for Different Jobs

Think about it like a toolbox:

Gemini Ultra: This is your super-sized, power tool. Ultra handles the most complex, demanding tasks, and needs a ton of computing power to run. Think tasks like scientific research assistance or generating detailed concept art. It's powerful, but not meant for your everyday toolbox.

Gemini Pro: This is your versatile, go-to tool. It can handle a wide range of things well – writing essays, coding, brainstorming. It's a great overall model for many projects.

Gemini Nano: This is your pocket tool. It's fast, efficient, and designed to work on devices with less power, like your phone. Nano is best for quick tasks or situations where internet connectivity is an issue.

Why does this matter?

AI isn't one-size-fits-all. By tailoring their Gemini models, Google makes sure they can:

Deliver powerful AI in everyday devices

Offer solutions for huge computing-intensive tasks without always needing massive data centers

Adjust the "intensity" of the AI to match the user's needs, saving time and resources

Now it's starting to make some sense. Here's my understanding:

Gemini Advanced uses some version of Ultra. (There's a smaller, less resource-intensive version of Ultra specifically intended for Gemini Advanced.)

Gemini uses Pro for most tasks, but it might also use Nano for some tasks. Gemini will also use a mix of models, depending on the task.

Yeah, I know. This is kind of confusing. At the end of the day, though, I'm not sure the inner workings matter for most of us. Like GPT-3.5, Gemini is probably fine for most tasks. If Gemini isn't up to the task, try Advanced.

So far, I've been pretty happy with my use of Gemini Advanced. It did a good job of helping me unravel the various names and models. I've also played with it for some course-related tasks and it's performed well. Based on the reviews I read, some users are happy with Gemini and Gemini Advanced and some aren't. No surprise there. As they say, your mileage (kilometerage?) may vary. Try it for yourself and see how it performs for you. I'd love to hear your impressions of Gemini (or anything else in the GAI world). You can email me at craig@EthcialAIUse.com.

Yet another GAI in education survey

A higher ed think tank (whatever that means), HEPI, released a policy note that included some results from a survey of 1,250 undergraduate students in the UK. According to the survey, 53% of students used GAI to "help with their studies," but only 5% said they were likely to use AI to cheat. I don't doubt that these statistics accurately reflect the survey responses, but I'm skeptical about both of these numbers. 53% seems high, and 5% seems very low, but I'm just guessing.

In my conversations with students and faculty, the uptake of GAI among students is lower than some might think, especially if we consider regular use. 53% might have tried GAI, but I don't think that many use it regularly. But I really don't know.

To me, the more important part of the article discusses the digital divide problem. The digital divide is real, and it has real consequences for many aspects of society, including higher education. We need to keep chipping away at the digital divide if we truly want a just society.

The article provides some other interesting findings. For example, the survey found that 66% of students think it's OK to use GAI to explain concepts, but only 3% consider using AI text in assessments without editing to be acceptable. Check out the article for more results: https://www.diverseeducation.com/reports-data/article/15663833/study-reveals-only-16-of-faculty-is-ready-for-genai-in-higher-education.

Resource of the week

There's been a lot of online chatter about Perplexity.ai (https://www.perplexity.ai/) lately. The gist of the chatter is that Perplexity is becoming a go-to GAI tool when you need to uncover sources. It's kind of a search engine on steroids.

Perplexity's "About" page says this: Perplexity was founded on the belief that searching for information should be a straightforward, efficient experience, free from the influence of advertising-driven models. We exist because there’s a clear demand for a platform that cuts through the noise of information overload, delivering precise, user-focused answers in an era where time is a premium.

OK. I'm not exactly sure what that means. Their "Overview" page (https://blog.perplexity.ai/getting-started#what-is-perplexity) is more informative (maybe). On that page, Perplexity is called "... your AI-powered Swiss Army Knife for information discovery and curiosity. It's not about answering questions, it's about empowering you to do more -- whether you're looking to summarize content, explore new topics, or even get a little creative." The Overview goes on to list some use cases: answering questions, exploring topics in depth, organizing your library, and interacting with your data. I can attest that Perplexity is pretty good for the first three, but I haven't tried it with my own files yet.

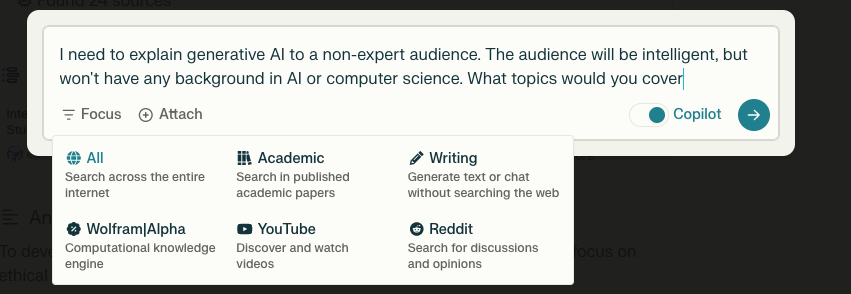

Two things set Perplexity apart for me. First, Perplexity's Copilot gives you a "guided search experience." This can be incredibly helpful. Basically, you can give Perplexity a typical prompt, and Copilot will ask you clarifying questions. But there's a very cool, kind of hidden feature with Copilot. You can restrict how Perplexity carries out its search. Check this out:

You can go broad (All) or narrow (e.g., published academic papers or Reddit). You can even discover useful videos.

Let's get back to the clarifying questions. When I entered the prompt above, Copilot came back with this:

Wow! That seems very useful. I especially like that you can bypass this step and just get your answer. By the way, you can choose more than one of the options (e.g., basic understanding and applications).

Copilot will even show you the steps it took in generating its response (kind of). It tries to understand your question, then asks clarifying questions, and reads your responses. Then, it searches and generates its response.

I'm not going to paste the entire response here, but here's a screenshot of part of it. There are some interesting things to notice. First, it lists its sources. This is massively helpful when you're working on something important. It's easy to examine the underlying source to either get more detail or to check its quality. Second, Copilot shows you which sources it used for each part of its answer. For example, it pulled from the techtarget and google sources for "What is Generative AI?" Again, very useful stuff. Finally, you can ask follow-up questions, which is nice.

Perplexity's Collection feature is also very useful. Basically, it allows you to group related threads (Perplexity's word for conversations).

One of my biggest frustrations with ChatGPT is trying to go back and find conversations, especially when I've been working on something over several sessions. Collections solves this problem. You can also give a prompt that is applied to every thread in the collection, which is another useful feature. Collections can also be shared. I haven't tried this yet, but I can see its benefits for bigger projects.

All in all, Perplexity.ai is a useful tool in your GAI toolbox. I was so impressed that I paid for a yearly pro subscription pretty quickly. There are desktop, iPhone, and Android apps, but I haven't tried those yet. So do yourself a favor and check out Perplexity.ai. It occupies a distinct position in the GAI landscape and it's worth considering for anyone in higher ed.

Tip - An experiment in getting students to think

My Principles of Information Systems class includes some pre-class online assignments. These are simple little activities designed to get students to think about the material before class. They're easy, and I'm very lenient in the grading. Recently, I noticed that some submissions looked like they were generated with generative AI. To be clear, I didn't THINK they used GAI; I KNOW they did. I let it slide the first time and just mentioned my suspicions in class. The second time, I required students to resubmit, instructing them to put the answer in their own words.

I'm teaching the same class in the spring term, so I started thinking about how to modify these assignments to keep students from just copying and pasting a GAI response. The prospect of numerous academic honesty reports does not sound fun to me, so I decided that I needed to modify the assignments so that students have to think about the material ... at least a little. So, I tried a little experiment. I decided to short-circuit the inappropriate use of GAI. My idea was that I would give students a GAI-generated answer and ask them to reconcile this with information from the textbook. Here's the original assignment. As I mentioned, it's pretty simple:

Compare and contrast supply chain management systems and customer relationship management systems. Give three ways they're similar and three ways they're different.

I really like compare-and-contrast questions and I've used this one or something similar for a long time, with good results. But I'm not so sure that this is a good assignment in a GAI world. So, I modified the assignment to this:

Instructions

I'm going to give you a task and then tell you the answer given by generative AI. Then you will compare that answer to the information in the textbook and briefly describe how the information from generative AI and the textbook are 1) similar, and 2) different.

Task

You want to compare and contrast SCM and CRM systems by naming three ways they are similar and three ways in which they are different.

Here's what generative AI came up with.

Similarities:

Data Utilization: Both SCM and CRM systems rely heavily on data analysis and utilization. They collect, store, and analyze data to make informed decisions. SCM uses data to optimize supply chain operations, while CRM uses it to enhance customer relationships.

Enhanced Efficiency: Both systems aim to improve efficiency in different aspects of a business. SCM focuses on streamlining supply chain processes to reduce costs and improve product availability. CRM, on the other hand, aims to enhance customer interactions, leading to better customer satisfaction and retention.

Integration Potential: Both SCM and CRM systems can be integrated with other business systems. They often work in tandem with Enterprise Resource Planning (ERP) systems to ensure seamless data flow between various departments, such as finance, sales, and operations.

Differences:

Focus and Objectives:

SCM primarily focuses on optimizing supply chain processes, including procurement, manufacturing, and distribution, to ensure the efficient flow of goods and minimize costs.

CRM, in contrast, is customer-centric and aims to improve customer interactions, manage leads, track sales, and enhance customer service to build lasting relationships.

Primary Users:

SCM systems are typically used by logistics and operations teams, supply chain managers, and procurement professionals who deal with inventory management and logistics.

CRM systems are more commonly used by sales and marketing teams, customer support, and service representatives who interact directly with customers to improve their experience.

Key Metrics and Outcomes:

In SCM, key metrics and outcomes revolve around supply chain efficiency, including metrics like inventory turnover, on-time delivery, and lead time reduction.

In CRM, the focus is on customer-centric metrics, such as customer satisfaction, customer retention rates, conversion rates, and lifetime customer value.

In summary, while SCM and CRM systems share some similarities in terms of data utilization, efficiency enhancement, and integration potential, they differ significantly in their primary focus, user base, and key metrics. SCM focuses on optimizing supply chain processes, while CRM centers around improving customer relationships and interactions. Both are essential for the overall success of a business, but they address different aspects of operations.

How does this answer compare to what is in the textbook? Cite specific information from the text to support your answer.

Overall, I was happy with the results. Most students seem to have put a little thought into their responses. Some even went so far as to cite specific page numbers from the text. I'm confident that they increased their understanding of these systems, although I don't have hard evidence of this.

Here's why I like this approach:

It seems to be effective. Students have to give some thought to their answers, including doing some higher-level thinking.

Students can't just copy and paste a GAI-generated answer. Since GAI doesn't have access to the textbook, the students have to actually do a little work to the benefit of their learning.

Students may learn to be at least a little skeptical of GAI's output. There's nothing really wrong with the GAI output (it's actually pretty good), but there are some additional things that could be discussed.

The modification was low effort on my part. Going from concept to final assignment took maybe 5 or 10 minutes. That's not bad, given the benefits of this approach.

The approach can be replicated. Applying this approach to other topics shouldn't be hard. I like a method I can apply repeatedly, and this seems to be one.

As I said, I'm pretty happy with the outcome of my little experiment. Here's the thing: we're treading new ground, so we'll have to be willing to experiment and try new things. Some will work, and some won't. This is where faculty need help from administrators. Experiments sometimes fail, even those that are well thought out. If administrators punish faculty who try new things, faculty will stop trying and our students will suffer.