“Game changer” is one of those terms that gets thrown around far too readily. Often a “game-changing” technology turns out to be incremental at best. But Google’s Gemini Advanced 1.5 Pro with Deep Research is a rare AI tool that might deserve the label.

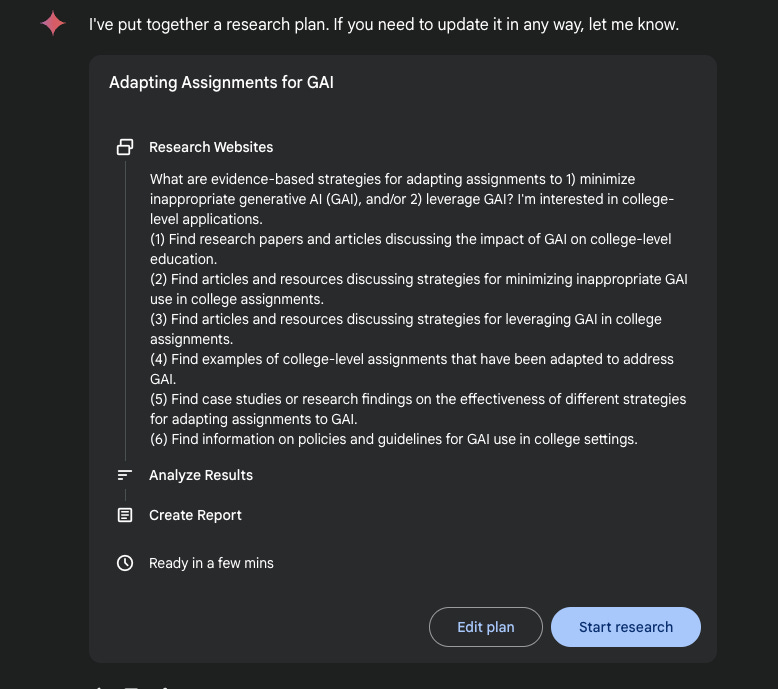

Google describes Deep Research as “Your personal AI research assistant.” It’s similar to Perplexity in that it does some searching (research) before formulating a response to your prompt. But Deep Research goes further. Before answering, it creates a multi-step research plan, which you can approve or modify. I’ll walk you through my experience using Deep Research to investigate AI-aware assignment design.

I wanted to do some research into how to modify assignments to not only minimize inappropriate AI use, but also to leverage AI.

Before entering a prompt, you need to select 1.5 Pro with Deep Research as the model to use. Once you do so, just enter your prompt as normal.

Here’s my prompt.

What are evidence-based strategies for adapting assignments to 1) minimize inappropriate generative AI (GAI), and/or 2) leverage GAI? I'm interested in college-level applications.

Gemini will ponder a bit, then return its research plan.

You can edit the plan if necessary; just click on “Edit plan.”

I didn’t really want to modify the plan, so I told Gemini it was fine. Then Gemini went to work. It shows you its progress as it goes along. By the way, you can ask Deep Research to limit its research to certain types of sources, like peer-reviewed articles.

Depending on the complexity of the research task, Gemini can take several minutes. My tests have indicated that it generally takes three to five minutes. You can move on to other things while you wait.

Here’s what Google has to say about the research process:

Over the course of a few minutes, Gemini continuously refines its analysis, browsing the web the way you do: searching, finding interesting pieces of information and then starting a new search based on what it’s learned. It repeats this process multiple times and, once complete, generates a comprehensive report of the key findings, which you can export into a Google Doc. It’s neatly organized with links to the original sources, connecting you to relevant websites and businesses or organizations you might not have found otherwise so you can easily dive deeper to learn more. If you have follow up questions for Gemini or want to refine the report, just ask! That’s hours of research at your fingertips in just minutes.

Once the research is complete, Gemini gives you its report. Take a close look at the upper right corner of the screenshot below. See where it says “Open in Docs”? That little button is pretty awesome. Click on it and (wait for it) the report becomes a Google Doc.

The report is nicely formatted, although for some reason it doesn’t make good use of styles. Because of the report’s formatting, copy/paste doesn’t work well, but Open in Docs makes getting the report into a document simple. Once the Doc is created, you can edit or share it just as you would any Google Doc. The report ends with a full list of references, each with a hyperlink, so it’s easy to check the original sources.

They’re kind of hard to see in the screenshot above, but the report includes little dropdown arrows that indicate citations. Clicking on an arrow shows the reference for that bit of text.

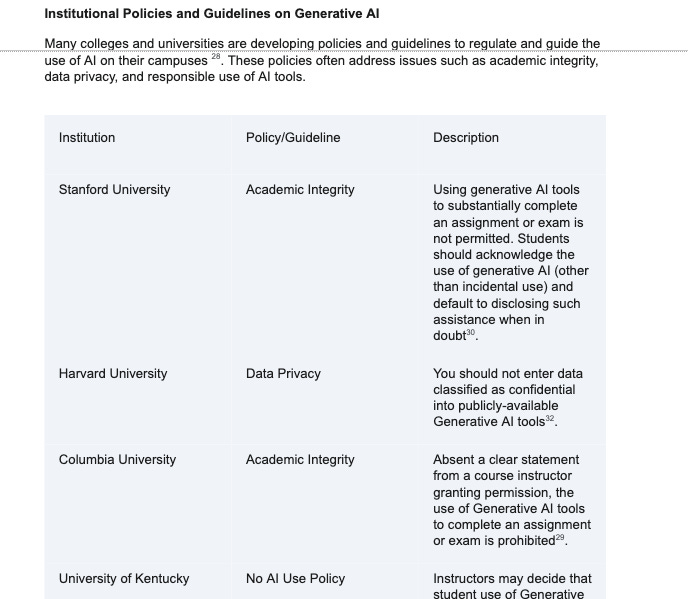

The report comes into Docs with its formatting largely intact. Even tables are imported correctly.

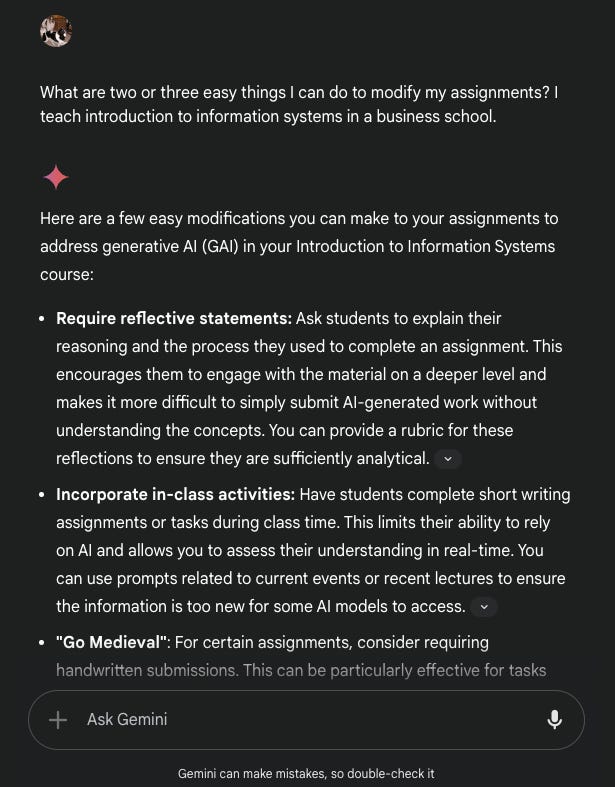

You can also continue to chat with Gemini. I think (but am not sure) that Gemini will continue to use the information found in the references. Gemini did cite specific sources for the response below, and these matched references in the report. I didn’t see a way to integrate the new response into the report, which would be a nice feature.

If you want to check out the full report, I’ve published it on the AI Goes to College Substack with a disclaimer that I didn’t write or check it.

Implications for higher education

What does Deep Research mean for higher ed? That’s a great question, one that deserves more thought than I’ve been able to give it so far. (Hey, I have a regular job too!) Rob Crossler and I will share some thoughts in an upcoming episode of the AI Goes to College podcast, so be sure to subscribe/follow.

My initial thought after testing Deep Research was “wow!” (OK, it was something more colorful.) Almost immediately thereafter, I thought that Deep Research is one more reason we need to rethink how we assess learning and assign grades. (Is it time to end grades? I wonder.)

For those on the administrative side, Deep Research could be a fantastic tool for getting a head start on reports and documents. It could also be great for quickly researching best practices, developing institutional policies, identifying new student markets, and dozens of other tasks.

I worry about students using Deep Research inappropriately. It’s easy to see students using Deep Research to create a document, then doing some minimal editing and claiming the work as their own. My bigger concern is that the line between appropriate and inappropriate use is increasingly blurry. How much editing makes something a student’s work? Should we just outright ban these tools (not that doing so would work)? My position on bans is clear: they’re wrongheaded and ultimately doomed to fail. Frankly, faculty have a lot of work to do to figure out how to react to the onslaught of increasingly capable AI tools. Burying our collective heads in the sand is a bad idea. So, I reiterate, we need to rethink how we evaluate learning. The old ways just won’t work anymore.

Bottom line

Here are my bottom-line thoughts on Deep Research.

Tools like Deep Research will continue to emerge and increase in their capabilities.

Students ARE going to use Deep Research and similar tools, so we need to figure out how to adapt.

Although I wouldn’t trust Deep Research for serious academic research, it can help with less scholarly tasks and even with quickly coming up to speed in an unfamiliar area.

My closing thought is a bit of a non sequitur. OpenAI and other AI vendors need to watch out. Google got off to a slow start with generative AI, but its technical and financial might allowed it not only to catch up with the field, but to overtake it in some ways. Grab your popcorn; this is going to be interesting.

Well, that’s all for this time. If you have any questions or comments, you can leave them below or email me at craig@AIGoesToCollege.com. I’d love to hear from you. Be sure to check out the AI Goes to College podcast, which I co-host with Dr. Robert E. Crossler. It’s available at https://www.aigoestocollege.com/follow.

Thanks for reading!