ChatHub - A Useful Chrome Extension for Comparing Chatbots

Do you ever compare prompt outputs across multiple AI models? If so, you might want to check out ChatHub ([https://chathub.gg/](https://chathub.gg/)). ChatHub lets you simultaneously submit a prompt to as many as six different large language models. For example, you can run the same prompt through ChatGPT, Claude, and Gemini and see the results side-by-side. Check out the example below, which is based on this prompt: “What are the advantages of comparing output from a prompt across several different LLMs?”

In this example, I ran the prompt through ChatGPT and Claude. I’m not sure what ChatGPT model is being used, but I suspect it’s either 3.5 or 4o. You can also use Gemini, Bing Copilot, Perplexity (which is awesome), Llama 3 and several others. (Both responses are available at the end of this article if you’re interested in seeing them.)

The comparison is interesting. Both chatbots started with the same benefit (diversity of perspectives) but quickly took different paths, although there are several common responses. Seeing the two outputs side-by-side makes the differences easy to spot, which can be useful at times. But I think the main benefit may be to get more comprehensive responses, which allows you to combine responses, pick-and-choose elements, etc.

Prompt Library

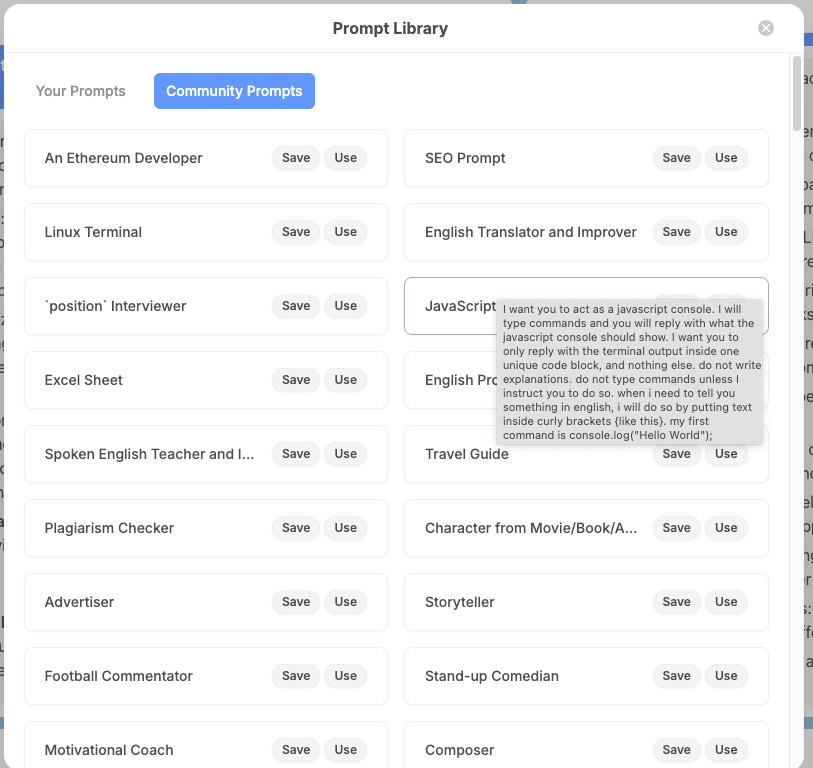

There’s a kind of hidden feature of ChatHub that may lead me to use it quite a bit. You can build a prompt library by clicking on the pencil icon (I think it’s a pencil) in the prompt section. The icon is circled in red in the screen shot.

Over time, you can build up a library of frequently-used prompts, which seems like a huge time saver. Sure, you can do the same thing with a notes app, but this seems more convenient once you get your library built up. There are also community prompts, which could be very useful. You can see a few in the screenshot below. There’s not any vetting of the prompts that I can see and some of them aren’t very good, but you might find something worthwhile. The quality was pretty inconsistent among the community prompts I checked out, but it’s pretty easy to ignore the bad ones.

ChatHub only runs on chromium based browsers such as Chrome, Edge, and Brave. There’s a free tier that allows simultaneous chats with two chatbots. They also have three tiers of one-time payments starting at $39 and a monthly service at $19 per month ($15 if you pay annually). You can see some of the differences in the screenshot below. The big benefit of the monthly plan is that you don’t need separate accounts with the various chatbots. So, if you’re paying for several premium accounts you might save some money by going the monthly route with ChatHub. I wouldn’t do that until you’re sure you like using ChatHub though. The free version is pretty good as long as you have existing chatbot accounts, so play around with ChatHub before putting your money on the line. I’m happy enough that I’m going to purchase one of the one-time payment accounts. As I mentioned, there are three tiers of these ($39, $59, and $69). The $39 plan only lets you activate one browser while the more expensive plans allow three and five browsers to be activated respectively. You can switch browsers on the $39 plan but you have to deactivate the membership on the old browser and reactivate it on the new one, which sounds like a bit of a pain. I’m probably going to spring for the $69 plan since I want to be able to use ChatHub at home, at work, and from my laptop.

So, should you use ChatHub? Well, that’s hard to say. If you always stick to one chatbot, probably not. But if you use multiple chatbots I think ChatHub is worth checking out. The free tier will give you a pretty good idea of whether or not it will be useful. For those of you who are helping others learn generative AI, I don’t think I’d introduce brand new users to ChatHub. It’s probably better to help them learn one tool first before getting into model comparisons.

If you decide to check out ChatHub, let me know what you think by either using the “contact” button at aigoestocollege.com or by emailing me at craig@AIGoesToCollege.com.

Footnote:

This article is dedicated to my colleague Ajaan, who loves to compare chatbot outputs so much we turned his name into a verb. Instead of asking whether someone compared output, we now just ask, “Did you Ajaan that?”

Responses:

ChatGPT

Comparing outputs from a prompt across several different Large Language Models (LLMs) can provide a range of advantages, especially for those engaged in fields like education, research, or technology development. Here are some key benefits:

1. **Diversity of Perspectives**: Different LLMs may have been trained on diverse datasets, with varying algorithms and objectives. This can lead to different interpretations or answers to the same question, offering a broader perspective and fostering a more comprehensive understanding of the subject matter.

2. **Error Reduction**: By analyzing the outputs of multiple models, one can cross-verify information, reducing the likelihood of relying on erroneous data. This is particularly useful in academic and professional settings where accuracy is crucial.

3. **Model Benchmarking**: Comparing outputs helps in assessing the strengths and weaknesses of different models. This can guide users in choosing the most appropriate model for specific tasks or in understanding how different models perform under various conditions.

4. **Insights into Model Bias and Training**: Seeing how different models respond to the same prompt can provide insights into the biases inherent in their training data or algorithms. This is important for critical applications where bias needs to be minimized.

5. **Enhanced Creativity and Innovation**: In creative and problem-solving contexts, different outputs can stimulate new ideas and approaches. This can be particularly beneficial in fields like marketing, product development, or content creation.

6. **Improved Reliability and Robustness**: By evaluating how various models handle the same prompts, developers and researchers can better understand the robustness of these models. This is crucial for developing systems that are reliable and perform well in real-world applications.

7. **Educational Value**: For educators and learners, comparing these outputs can be an excellent educational tool to understand machine learning concepts, the nature of AI-generated content, and the critical evaluation of AI outputs.

Overall, the practice of comparing LLM outputs can not only enhance decision-making and insight generation but also help in advancing the field of AI by identifying areas for improvement in model development and application.

Claude 3

Comparing output from a prompt across several different large language models (LLMs) offers several advantages:

1. Diversity of perspectives: Different LLMs may approach the same prompt in varied ways, providing a broader range of ideas and insights.

2. Identification of biases: By comparing outputs, you can spot potential biases or limitations specific to individual models.

3. Consistency check: If multiple LLMs provide similar answers, it can increase confidence in the information's reliability.

4. Performance evaluation: Comparing outputs allows you to assess which LLM performs better for specific tasks or domains.

5. Complementary strengths: Different models may excel in various aspects, allowing you to leverage their combined strengths.

6. Error detection: Discrepancies between model outputs can help identify potential errors or inaccuracies.

7. Robustness testing: Seeing how different models handle the same prompt can reveal the stability and consistency of LLM responses.

8. Task suitability assessment: It helps determine which LLM is most appropriate for particular types of prompts or applications.

9. Inspiration for prompt engineering: Observing varied responses can inspire ideas for refining prompts to get better results.

10. Understanding model limitations: Comparing outputs highlights the current capabilities and limitations of different LLMs.

Would you like me to elaborate on any of these points or discuss additional aspects of cross-LLM comparison?