ChatGPT memory, multi-model conversations, and working with part of a conversation

AI Goes to College No. 8

In this issue:

AI Goes to College YouTube channel

ChatGPT Memory now available to all users

Poe - Multi-Model Conversations

ChatGPT Reply and Gemini Modify Text: Query or rewrite parts of a conversation

News

AI Goes to College YouTube channel

AI Goes to College is on YouTube! In addition to episodes of the AI Goes to College podcast, I'll post short, "low-fi" videos showing different things I'm doing with generative AI. (Low-fi means minimally edited, mostly one-take videos.) When available, I'll also post videos of talks I've given. For example, the channel includes my talk on the ethics of human-AI coproduction. You can find the channel by searching for @AIGoestoCollege on YouTube or by going to https://youtube.com/@AIGoestoCollege?si=YQSo5ix_D9LalEZF. Don't forget to like, subscribe, etc., etc. Seriously, I'd love for you to check it out and leave a comment. (Apparently, comments feed the algorithm and help spread the word.) Thanks!

ChatGPT Memory now available to all users

A few months ago, OpenAI started testing the ability for ChatGPT to remember things about your conversations to "make future chats more helpful." That feature is now live for users in North America, but not for those in Korea or Europe. Oddly, it's not available for Team or Enterprise users.

According to OpenAI, ChatGPT can remember things across chat sessions, which should improve the quality of responses. Memory is kind of like custom instructions, except that you won't have to remember to add important details to your custom instructions yourself; ChatGPT should remember them from chat to chat.

My experience with custom instructions has been mixed. Some aspects of my custom instructions seem to work pretty well, but others do not. For example, I have a custom instruction that says "Do not rewrite anything unless I specifically ask you to." Maybe "anything" is too vague, but ChatGPT almost always rewrites portions of text I give it when I'm asking for a critique. This isn't a big deal since I just ignore the rewrites, but it makes me wonder about custom instructions and (now) memory.

You can manage memory through Personalization in Settings, where you can also turn memory off. The Manage button should also show you the preferences and details ChatGPT keeps in its memory. At the moment, this section is blank for me; I'm not sure what that means. Hopefully, it will start to populate soon. We'll see.

It's too early to tell if the memory feature really will improve responses. As I've written before, time will tell.

If you want to learn more about ChatGPT's memory, check out OpenAI's FAQ here: https://help.openai.com/en/articles/8590148-memory-faq

Let me know if you see any performance improvements now that memory is live for most users. Email me at craig@AIGoesToCollege.com or leave a comment.

Tool updates

Poe - Multi-Model Conversations

Poe.com, which is one of my favorite AI tools, recently rolled out the ability to use multiple models within a single conversation. This is a pretty clever move by Poe, since being able to use multiple models is their competitive advantage. (By the way, "model" refers to the large language model that serves as the engine for a generative AI tool. For example, GPT-4 is the model that drives ChatGPT-4.)

There are a couple of ways you might use this. The first is to compare the responses of different models. The second is to switch from one model to another to tap into the strengths of each. Let's run through an example of each use.

Comparing responses

Recently, I was working on a panel proposal with Claude's help. I needed to write an abstract for the proposal, so I wrote one and then asked Claude 3 Opus to critique it. Here's part of Claude's response. (I fed Claude the proposal and abstract.)

Here's the last part of Claude's response, along with some suggestions for extending the conversation. Below those suggestions are three "Compare" options that let you regenerate your last query using one of three other models. I'm not sure how Poe chooses these models, by the way. You can also continue the conversation with Claude by using the text window at the bottom. Let's see what GPT-4 has to say.

Here's GPT-4's response.

The two responses are quite different in my view, although both sets of recommendations are reasonable. There are aspects of both that I like, so it's nice to be able to generate multiple responses quickly. Notice that if we continued this conversation, we'd be using GPT-4. (You can tell by the @GPT-4 note in the window for the next query.) Replacing @GPT-4 with @Claude-3-Opus continues the conversation with Claude. Overall, I think this is pretty useful, but I'm not sure I'd use it frequently. Still, when you want different perspectives on something, this capability makes getting them MUCH easier.

Multi-model conversations

The second use is to carry out conversations across multiple models. This could be massively useful. Models vary in their abilities. For example, some people think that GPT-4 is better at developing ideas than Claude-3 Opus, but that Claude is better at writing. (I don't necessarily agree, by the way.)

I'm going to use an example from my philosophy podcast, Live Well and Flourish (https://www.livewellandflourish.com/). Here's the initial prompt and the first part of GPT-4's response.

GPT-4 and I had a few rounds of back-and-forth to develop the idea and refine the outline. Then it was time to write the script. Please note, however, that I do not use AI scripts for the podcast. Crafting the script is part of the enjoyment I get from producing Live Well and Flourish, so I always write the script myself. Sometimes, though, I get AI to write a draft just as a guide for writing my own. Anyway, I switched to Claude-3-Opus for the writing. I find it better to ask AI to write longer documents in sections, so that's what I did, as you can see below. Adding @Claude-3-Opus to the prompt is what told Poe to use Claude instead of GPT-4.

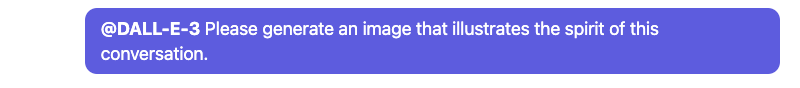

Then, just for fun, I switched to DALL-E and asked for an image that illustrated the spirit of the conversation.

The image DALL-E generated was ... odd and a little disturbing. I'm not going to show it here because I don't want to give you strange dreams. Seriously, it was odd.

Overall, this is a great new feature, one that seems to be unique to Poe (at least in an easily accessible form). As I've mentioned before, I'm a big fan of Poe.com, and multi-model conversations just strengthen my fandom.

ChatGPT Reply and Gemini Modify Text: Query or rewrite parts of a conversation

A few months ago, a useful new feature popped up on ChatGPT. I think its official name is "reply," but the details are a bit scarce. Its genesis seems to be a feature request, which you can view here: https://community.openai.com/t/add-a-reply-button-to-chatgpt/549996.

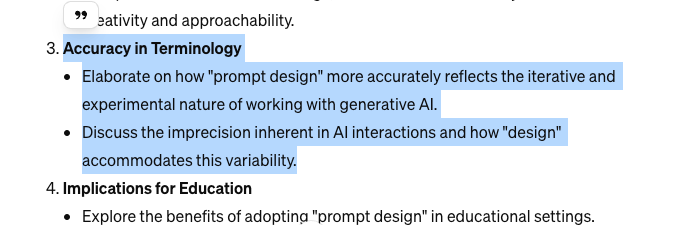

Regardless of its origin, Reply is pretty useful, and it's simple to use. You highlight a portion of a ChatGPT response, and a double-quote icon appears, as shown below. (This example is part of a chat I had about the downsides of the term "prompt engineering." I'm advocating for a shift to "prompt design.")

ChatGPT uses the highlighted portion as input into the next prompt:

Suppose you've asked ChatGPT to draft some exam questions, and one of them needs more depth. All you need to do is highlight that question and ask for more details. Or maybe you're using ChatGPT to brainstorm some ideas and you really like one of them. Just highlight that idea, click on the quote mark, and start developing it.

Is this a groundbreaking new capability? No, but it is oddly useful. I say "oddly" because you could always just copy and paste the text into the chat window. Somehow, though, using Reply seems much easier, especially for longer chunks of text. Give it a try and see what you think.

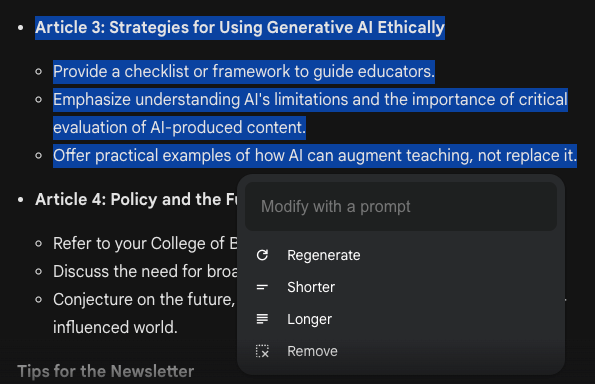

Gemini has an interesting, similar feature. (I'm not sure what it's called.) When you highlight part of a response, you get a little pencil icon and the text "Modify selected text."

If you click on the pencil, you get four options: regenerate, shorter, longer, and remove. You can also modify the text using a prompt.

Let's explore the modify-with-a-prompt option. Gemini's original response mentioned a checklist, so I asked it for the key elements of the checklist.

The result is interesting and not what I wanted, but this was my fault. Gemini replaced the highlighted portion with the new response. If you compare the screenshot below with the prior screenshot, you'll see that Gemini dropped two parts of Article 3. This happened because I had all of that text highlighted; basically Gemini replaced what I had highlighted with the new response. Fortunately, there's an undo button, which I used.

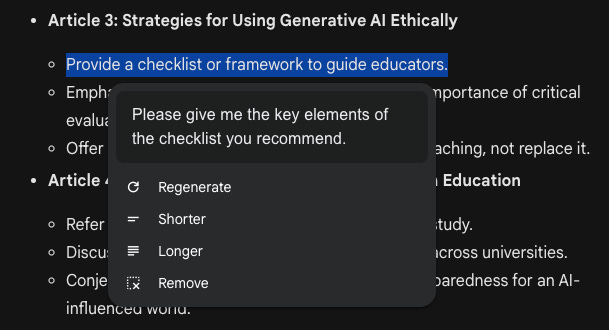

Let's try this again, this time highlighting only the first bullet point.

Success! This time Gemini retained the un-highlighted portions and just modified what was highlighted. It's interesting that the response is somewhat different; that's the nature of large language models though. Put in the same prompt twice and you'll get slightly different responses.

These two features have some overlap, but they really serve different purposes. ChatGPT's Reply lets you rewrite part of a response, but it's really meant for focusing on a specific part of a response. Gemini's feature is mostly directed at rewriting, which is why it replaces the highlighted text. Which is better? Well, it depends on what you're doing. I find ChatGPT's more flexible, but both have their place.

Well, that’s all for this time. If you have any questions or comments, you can leave them below, or email me - craig@AIGoesToCollege.com. I’d love to hear from you. Thanks for reading!