AI Goes to College No. 3: Detecting fake papers, Zoom meeting magic, and Gemini is pretty awesome

Helping higher education professionals navigate generative AI

Welcome to Issue #3 of AI Goes to College!

This week's issue includes:

Insights on how to recognize AI-written text

My impressions of Google's Gemini (spoiler: I like it)

An overview of an awesome Zoom feature that most people don't know about

A new memory management feature coming to ChatGPT, and

OpenAI's scary-good video generator

Sorry, no rant this week.

The highlights of this newsletter are available as a podcast, which is also called AI Goes to College. You can subscribe to both the newsletter and the podcast at https://www.aigoestocollege.com/. The newsletter is also available on Substack.

Tip of the week

If you give any at-home assignments in your courses, you've probably received responses written by generative AI (GAI). If you haven't, you will soon. That's just the way of things in a GAI world. AI detectors do not work; they simply aren't reliable in their current state. (Some seem to be getting better, though.) So, what can you do?

The best approach, in my opinion (and that of many others), is to modify your assignments so it's harder for students to use GAI. I'll write about that in some detail later. Getting smarter about sniffing out GAI-written text is also a good idea.

The first step I suggest is to run your assignments through one or two GAI tools. Do this for several assignments and you'll start to see some common characteristics of GAI responses. Many students who use GAI inappropriately are lazy; they'll just copy and paste the answer into Word or the response section of your learning management system. They won't try very hard to mask their use of GAI, so understanding how ChatGPT and other tools format their responses is useful in detecting inappropriate use. Keep in mind, though, that if you give GAI the same prompt ten times, you'll get ten slightly different responses. That's just the way the systems work. Still, the format and "feel" are usually consistent.

GAI formatting

The first indicator of GAI use is how it often formats longer responses. Many GAI tools use Markdown to format more complex responses. Markdown is (ironically) a markup language that uses simple symbols, such as asterisks for bold text and pound signs for headings, to produce formatted text from a plain-text editor rather than a normal word processor. (I'm using Markdown in Obsidian to write this article.) Because GAI systems often use Markdown, they tend to format text in certain ways.

GAI loves numbered or bulleted lists with boldface headings, often with details set off with a colon. Here's an example from one of my conversations with ChatGPT about how to recognize GAI text:

This example doesn't use colons, but the pattern is recognizable. The next example (from the same conversation) is a classic GAI numbered list:

So, whenever I see a response formatted like this, my GAI radar pings. Sure, it's entirely possible that a student would format their response the same way, but not many would go to the trouble.

The next "tell" is the complexity of the answer. In my class, the online assignments are pretty simple. They're just intended to get students to think about material prior to lectures or to reinforce something from a lecture or chapter.

Here's one of my assignments, which is designed to get students to think a bit about Web 2.0.

Reflect on how you used Web 2.0 technologies as schools transitioned to online learning during spring of 2020 as the COVID-19 pandemic occurred. What Web 2.0 technologies did you use? Which worked great and which were challenging? Why?

Prior to GAI, students would write a few lines about what they liked or didn't like about Zoom, Google Docs, and similar technologies. The answers were very much opinion-based, which was fine.

Here's the ChatGPT answer:

Video Conferencing Platforms:

Tools like Zoom, Google Meet, and Microsoft Teams became essential for conducting live virtual classes, facilitating real-time interactions between teachers and students.

These platforms allowed for synchronous communication, enabling teachers to deliver lectures, host discussions, and provide immediate feedback to students.

Learning Management Systems (LMS):

Platforms such as Google Classroom, Moodle, and Canvas served as centralized hubs for organizing course materials, assignments, and assessments.

LMS platforms facilitated asynchronous learning, allowing students to access resources, submit assignments, and engage in discussions at their own pace.

Collaborative Document Editing:

Tools like Google Docs, Microsoft Office Online, and Dropbox Paper enabled collaborative document editing and real-time collaboration among students on group projects and assignments.

These platforms promoted teamwork and fostered a sense of community among students despite physical separation.

Interactive Whiteboard Tools:

Platforms like Jamboard, Microsoft Whiteboard, and Explain Everything provided virtual whiteboard capabilities, allowing teachers to illustrate concepts, solve problems, and engage students in interactive lessons.

Interactive whiteboard tools enhanced the delivery of visual content and encouraged active participation during virtual classes.

While many Web 2.0 technologies proved to be effective in supporting online learning, some challenges were also encountered:

Technical Issues:

Connectivity issues, audio/video glitches, and platform outages occasionally disrupted virtual classes, impacting the continuity of instruction.

Inadequate hardware and internet access among students further exacerbated these challenges, leading to disparities in access to online learning resources.

Digital Divide:

Socioeconomic disparities and inequities in access to technology became more pronounced during the transition to online learning.

Students from underserved communities faced challenges accessing reliable internet connections and suitable devices for remote learning, widening the digital divide.

Pedagogical Adaptation:

Adapting instructional strategies and assessments to an online format required teachers to rethink their teaching practices and leverage technology effectively.

Balancing synchronous and asynchronous activities, maintaining student engagement, and providing personalized support posed pedagogical challenges in the online learning environment.

Wow! This one is pretty easy to detect. First, it's hard to imagine a student putting this much effort into a small assignment. Second, the answer has too much complexity and detail. Notice how many of the tool categories list multiple examples (Zoom, Meet, and Teams, for instance). Students are unlikely to include multiple examples in multiple parts of their answer.

The answer is also very impersonal. Nothing about "my school" or "I liked/hated" this tool or that tool. No dissing on teachers or schools. It's all very cold and factual.

Finally, vocabulary like "socioeconomic" and "continuity of instruction" is a huge indicator of GAI-generated text. Few students write or talk like this.

Of course, clever, industrious students can take what GAI produces and put it in their own words. In some cases, I'm kind of OK with this since they're still making connections and processing information. But in reality, the clever, industrious students aren't the ones most likely to use GAI to simply spit out assignment answers.

GAI writing style

GAI tools tend to have a distinct writing style that is very different from the way most students write. Here are some indicators of GAI-written text, which I compiled with the help of ChatGPT 4 and Gemini Advanced, coupled with my own experience.

GAI writing is often impersonal and mechanical, yet very clean with respect to spelling, punctuation, and grammar. GAI also seems to use certain words and phrases in abundance.

GAI LOVES transitional phrases. For some reason, ChatGPT seems especially fond of "moreover"; I don't think I've ever heard a student say "moreover." "Furthermore," "additionally," "in conclusion," and my personal favorite, "henceforth," may also indicate GAI use, especially if the same phrases are used repeatedly.

Here are some other GAI favorites:

thus

therefore

consequently

indeed

notably

nevertheless

nonetheless

GAI also tends to use a consistent set of idioms and clichés:

At the end of the day

Needless to say

All things considered (A great radio show, but not in most students' vernacular ... neither is "vernacular".)

The fact of the matter is

Of course, it's entirely possible that a particular student will write this way, but it's not likely. (Seriously, who writes this way?)
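If you'd like to see how often the words and phrases above turn up in a submission, a short script can tally them. Here's a minimal sketch in Python, assuming you have Python 3 available; the function name `count_gai_favorites` and the phrase list are illustrative choices, not an established tool. A tally is not a detector. It's one more piece of circumstantial evidence, and a student who genuinely likes "moreover" will score just like a chatbot.

```python
import re
from collections import Counter

# Illustrative phrase list taken from the lists above.
# This is NOT a validated detection vocabulary; adjust it to your own experience.
GAI_FAVORITES = [
    "moreover", "furthermore", "additionally", "in conclusion", "henceforth",
    "thus", "therefore", "consequently", "indeed", "notably",
    "nevertheless", "nonetheless",
    "at the end of the day", "needless to say",
    "all things considered", "the fact of the matter is",
]


def count_gai_favorites(text: str) -> Counter:
    """Return case-insensitive, whole-word counts of each listed phrase found in text.

    Hypothetical helper for illustration: a high count only suggests a closer look.
    """
    lowered = text.lower()
    counts = Counter()
    for phrase in GAI_FAVORITES:
        # \b word boundaries keep "thus" from matching inside "enthusiasm".
        pattern = r"\b" + re.escape(phrase) + r"\b"
        n = len(re.findall(pattern, lowered))
        if n:
            counts[phrase] = n
    return counts


if __name__ == "__main__":
    # Replace this sample with the text of a student response.
    sample = (
        "Moreover, Zoom was useful. Furthermore, Google Docs helped. "
        "Moreover, at the end of the day, online learning was hard."
    )
    for phrase, n in count_gai_favorites(sample).most_common():
        print(f"{phrase}: {n}")
```

Paste a response into `sample` (or read it from a file) and run the script. Whole-word matching is a deliberate choice: it avoids false hits inside longer words, at the cost of missing misspelled variants.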

Other indicators

GAI is also prone to factual inaccuracies and inconsistencies with class material. One big tell for me is when student responses don't follow what we discussed in class or what's in the textbook. For example, in my world the system development life cycle (SDLC) is a big deal. But there are dozens of flavors of the SDLC, which vary in how many phases they include and what each phase is called. They're all basically versions of the same thing, but some experts and textbooks favor one version over the others. If a student's answer uses a version that's different from the one I present, I'm pretty sure something is up.

GAI answers often lack contextual nuance. They tend to be generic, especially in the hands of inexperienced users. This is one reason that contextualizing your assignments is a good way to adapt them for a GAI world.

GAI responses also lack personal insights or references to personal experiences. That points to another good way to adapt your assignments: require students to relate the material to their own experiences.

Many GAI tools have trouble with citations. I don't require citations or references in my assignments, so I don't have a lot of experience here. But I do know that GAI will absolutely make up references. Most GAI tools also fail to cite the most current resources, since they're trained on older data. Finally, GAI tends to provide fairly generic references rather than ones tailored to the particular essay.

At the end of the day (sorry, couldn't resist), I think of GAI detection as a collection of circumstantial evidence. No single indicator is enough to conclude that a student used GAI, but when the indicators pile up, it's a pretty good bet they did. How you respond, whether with a gentle nudge or a formal accusation, is up to you. But to respond, you first have to detect GAI use. Hopefully, this little article will help.

What are your favorite GAI "tells?" Let me know at craig@EthicalAIUse.com or leave a comment below.

Resources of the week

Google’s Gemini gets better

Lately, I've found myself using Google's Gemini frequently. For example, I just gave a talk, "The Perils and Promise of Generative AI," at the University of Louisiana System's "For Our Future" conference. I wanted to include some specific uses of generative AI for administrative tasks, and Gemini was a huge help. It helped me brainstorm several useful examples, then helped me dial in the couple that I decided to include in my talk. Although I didn't do a side-by-side comparison, Gemini's performance felt pretty similar to ChatGPT's. I used Gemini Advanced, which is roughly Google's equivalent to ChatGPT 4. Both are available only to paid users.

Gemini has some extensions that may prove quite useful. The extensions let you do things like ask questions about Gmail messages and Google Drive documents. There's also a YouTube extension that looks interesting. My initial testing yielded mixed results. The Gmail extension did a pretty good job of summarizing recent conversations. The YouTube extension failed at one task but did better at another. I'll do a full review of the extensions in the future. Currently, there are extensions for:

Gmail

Google Docs

Google Drive

Google Flights

Google Hotels

Google Maps, and

YouTube

If you haven't tried Gemini, I think you should. It doesn't have all of the features of ChatGPT, but Google is gaining ground quickly.

Zoom's Meeting Summary to the Rescue

Last fall, video conferencing giant Zoom released its AI Companion, which provides generative AI capabilities within meetings. I use it to summarize meetings, and it is awesome. If you're like me, it's easy to get so caught up in a discussion that you forget to take notes; a few days later, you've completely forgotten some of the details ... like what you're supposed to handle.

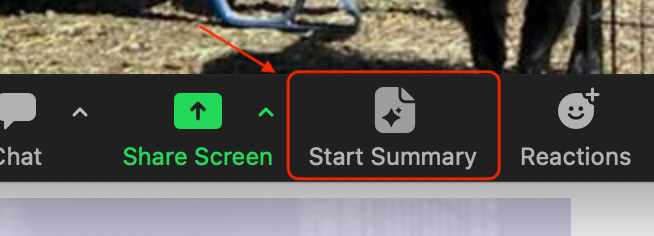

Zoom's AI Companion to the rescue! Just click "Start Summary" (see below) and AI Companion does the rest. Shortly after the meeting, the host receives an email with the summary, which they can forward to other attendees. The summaries are also available in your Zoom dashboard, where they're easy to edit or share.

The summaries are surprisingly good. A summary of a recent meeting is shown below. I redacted many details, but this should give you an idea of how the summaries are structured. The summary accurately reflects what we discussed in the meeting. Everyone I've sent summaries to has basically responded with the equivalent of "Wow!"

Meeting Summary for Craig Van Slyke's Personal Meeting Room

Feb 22, 2024 06:27 AM Central Time (US and Canada) ID: 228 686 4757

Quick recap

X, Y, and Craig discussed a variety of topics related to research and academics. They shared their roles and interests, and explored the potential of digital transformation in education, particularly the use of AI. Craig suggested ...

Summary

Academic Roles and Challenges

X, Y, and Craig discussed their roles and interests within the field of research and academics ...

Digital Transformation in Education: Exploring AI's Potential

Craig proposed the idea of exploring the concept of ...

Exploring Digital Transformation and Telework Stress

X, Craig, and Y discussed potential research avenues. Craig suggested ...

Shift to Local South African Survey Platforms

Craig discussed the methods used to recruit participants for a survey, ...

Privacy, Surveillance, and Cybercivility in Online Education

Craig proposed adapting ...

Qualtrics vs Cloud Research Costs

Craig and X discussed the costs associated with using ...

Project Tasks and Ethics Committee Approval

X, Y, and Craig discussed the next steps for their project. ...

Next steps

Craig will send X and Y the questionnaire items and the ethics submission ... X and Y will apply for ethical clearance ...

There are a couple of things to keep in mind. First, only the meeting host can start the summary. Second, AI Companion is available only to paid users. If you're hosting a meeting through your institution's Zoom account and don't see the Summary button, contact your IT folks to see whether AI Companion needs to be enabled.

There are some other features of AI Companion, but I haven't tried them yet. One particularly interesting capability is the ability to ask questions during the meeting. This could be very useful if someone joins late and wants to catch up. You can check out the other features here: https://www.zoom.com/en/ai-assistant/.

The next time you host a Zoom meeting, try the AI Companion summary. (But be sure to ask permission of the other attendees.) I think you'll be happy with the results. Let me know what you think: craig@EthicalAIUse.com.

AI in higher ed news

ChatGPT remembers

Soon (hopefully) ChatGPT will be able to remember things across conversations. You'll be able to ask ChatGPT to remember specific things or it will learn what it should remember on its own. According to OpenAI, ChatGPT's memory will improve over time.

Very few users have access to this new feature at the moment. OpenAI has promised to share plans for a full rollout "soon" (whatever that means). I don't have access yet, so what I write here is based on OpenAI's blog post announcing the feature (https://openai.com/blog/memory-and-new-controls-for-chatgpt).

Here are some examples of how memory might help:

In your conversations, you've told ChatGPT that you like a conversational tone in your emails. ChatGPT will craft future messages in this tone.

You explain that you teach management. When you brainstorm assignment ideas, ChatGPT will start by recommending management topics.

You've explained to ChatGPT that you like to include reflection questions in your assignments, so it will automatically add reflection questions to future assignments.

You'll be able to control how ChatGPT uses memory, which is pretty interesting. A "Personalization" setting will let you turn memory on and off. This will be useful when you're doing atypical tasks and don't want them to mess up ChatGPT's memory. (Wouldn't it be nice to have a "turn off memory" feature for our brains?) You'll also be able to tell ChatGPT to forget something. The screenshot below shows the Manage Memory feature.

Another cool feature is "temporary chat." A temporary chat doesn't use memory, but it also won't appear in your chat history, which might be a problem.

Memory seems like the next logical step from the custom instructions feature, which is available to Pro users. Custom instructions let you give ChatGPT persistent instructions that apply to all conversations. Here is part of my custom instructions:

Respond as a very knowledgeable, trusted advisor and assistant. Responses should be fairly detailed. I would like ChatGPT to respond as a kind, but honest colleague who is not afraid to provide useful critique.

Higher ed professionals will need to be careful about data privacy, especially student data. There are ways to mask personally identifiable information, but I still advise caution. Your school may have policies that restrict what you can put into generative AI tools. Always assume that whatever you put into a generative AI tool will be used to train the models.

The custom instructions are pretty useful, so I expect memory will be as well ... if I ever get access.

There's a lot more information in OpenAI's blog post, so I encourage you to check it out: https://openai.com/blog/memory-and-new-controls-for-chatgpt

OpenAI's Sora is scary good

It's been a huge couple of weeks in the world of generative AI. (It seems like there are a lot of huge weeks for generative AI.) First Google announced major new AI models, then OpenAI stole the spotlight with Sora (https://openai.com/sora). According to Sora's website, "Sora is an AI model that can create realistic and imaginative scenes from text instructions." Based on the demo videos on the Sora website, holy cow, does it live up to that promise. Seriously, what Sora produces is unbelievable.

What blows me away is the simplicity of the prompts used to create the demo videos. Here's the prompt that created this video (https://openai.com/sora?video=gold-rush):

Prompt: Historical footage of California during the gold rush.

The video might not be cinema quality, but it's quite good. When this is released to the public, I can see it being used for a lot of b-roll footage. Sora may also be useful for spicing up lectures.

The prompts for other videos on Sora's website are more detailed, but none are particularly complex. Mostly, they're just decent descriptions of what the video should show.

There are some odd things about a few of the videos. For example, there's a great video of a woman walking down a Tokyo street. Every "person" in the video seems to walk with the same cadence. They're not perfectly in sync, but they're close enough to make the video seem a little odd. If you look closely, you can spot little oddities in many of the videos, but you have to consciously look for them; at least I did. Currently, Sora videos are limited to one minute, but that's likely to change in the future.

I really like that OpenAI includes some failures as well. For example, there's a video of a man running the wrong way on a treadmill. There's also a somewhat disturbing video of gray wolf pups that seem to be emerging from a single pup.

It's hard to tell what this means for higher ed at this point. I can see Sora being useful for training videos, and I'm sure more clever people will find other ways to use Sora's capabilities. Regardless, it's a fascinating new AI tool that shows cutting-edge capabilities.

I can see Sora and similar tools being a bit of a time sink. The idea of creating cool new images and videos to add to our websites, lectures, and presentations is seductive, but I can see myself wasting hours on something that may not make much of a difference. As I've said before, beware the generative AI rabbit hole.

By the way, Sora isn't available to the general public yet and it's unclear when it will be. The cynical folks among us might say that OpenAI timed their announcement to take attention away from Google's announcements. Who knows if that's true, but I do know that Sora is an interesting peek into what's coming. You really should spend a few minutes checking out the videos. They're pretty amazing. Here's the link again: https://openai.com/sora.